A Path Blocked

Ok, last path metaphor, I promise.

Plus, while it was extremely maddening as it happened, it was actually resolved pretty quickly and could have been resolved three different ways. Really, other than its temporary effect, I probably shouldn’t say anything.

Before all that happened, I had been slowly resolving little issues bit by bit.

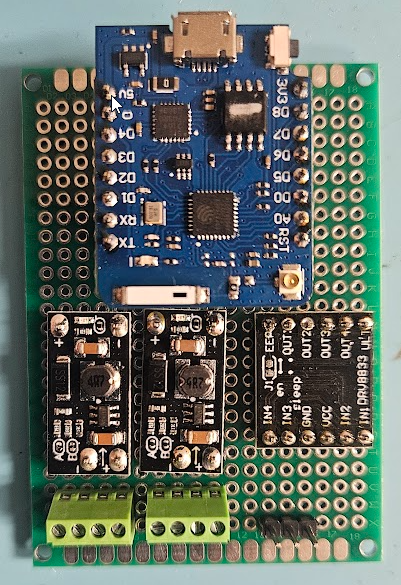

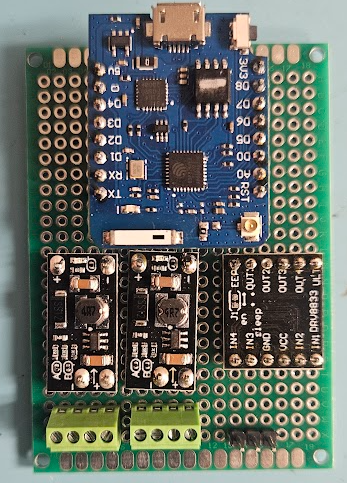

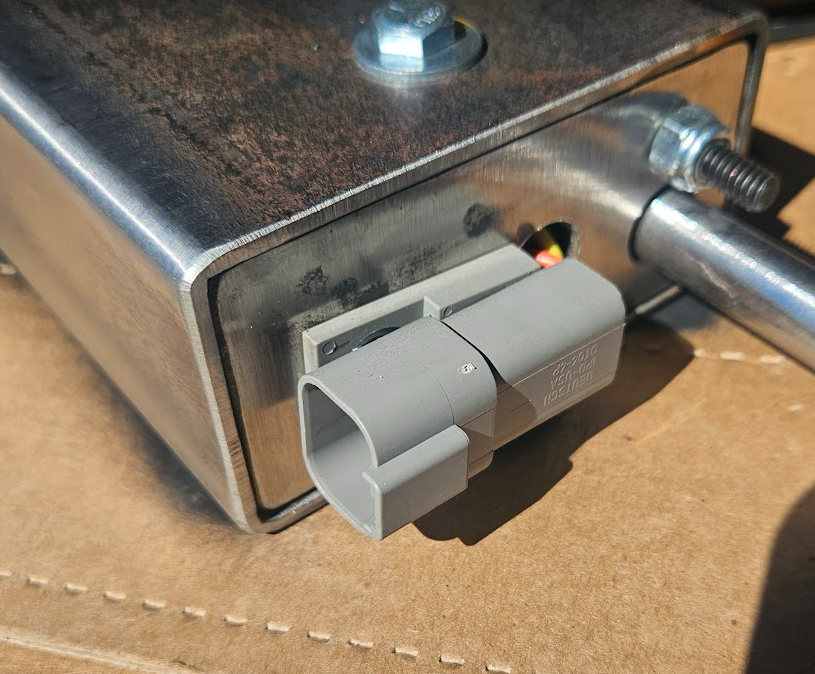

I have assembled what might be V1.0 of the Activator hardware board. The Trigger hardware board will probably be very similar, if not identical. Really, an identical board with different software could be ideal.

I say might because I find that I do not care for these particular green terminal strips. When I ordered them, I was expecting larger units. There is not a good sense of scale here, but these are eyeglasses sized screws. Actually, you can see that the screws are about the same size as the solder pads on the circuit board, which are about 0.075″ in diameter. I have already cross threaded one by opening it too far.

Otherwise, from left to right, the first terminal strip is for the battery negative and positive and the external power switch. The next strip is for the plunger reset warning light and the plunger sensor switch. The three pin header is for the servo that trips the plunger.

Above the connectors, left to right are the 5 volt regulator to run the controller, the 12 volt regulator to run the warning light and possible future things and a dual H-bridge board that currently drives just the warning light.

Finally, above that is the star of the show, a clone of the ubiquitous Wemos D1 Mini Pro, so called Pro because it has an external antenna connector, which will be handy since this board will be inside a steel box.

I had two power mishaps with this board. Really, it was one power mishap that caused two problems.

When I was researching what equipment to shoehorn into this system to implement wireless, I had some realizations that could be visualized like rungs on a ladder. First, I knew that my controller board was necessarily going to have to take up some space and my Activator device is pretty crowded inside already.

One step up on the ladder.

The industrial relay that protects the lock motor, while a very robust device, is at its heart a very simple device that takes continuous power from outside and limits it to a short pulse of half a second or so to the lock motor. I will be providing that lock motor power locally now, through my controller board, which is smart and can do the time limit function. The industrial relay is also pretty big, so removing it might free enough room for both my controller and the battery.

Next step up on the ladder.

The lock motor runs on 12 volts. I did some measurements and found that during its brief moment of activation, it pulls about 4 amps. If I limit it to less than about 3 amps, it doesn’t pull hard enough to reliably trip the plunger. That is not a terrible amount of current, but as I looked around for commercial off the shelf 12V 4A lithium batteries with easy connections and easy charging solutions, they all tended to be kinda big. Way bigger than the space vacated by the timer relay, leaving no space for the controller board. Batteries of other chemistries are even bigger and don’t work as well, so I didn’t even look.

DC to DC boost converters are a thing these days. Actually, I have had a really successful history with multivoltage DC to DC converters back in my robotics days. Unfortunately, those blogs were lost ages ago. Anyway, I shopped for converters and, in a field absolutely flooded with converters for 12 volts INPUT, the few that I could find with 12V output tended to be physically large multivoltage output units for 48V+ input, or if they were boost converters that work at smaller inputs, they were never more than 1A out. There are a few exceptions in the $50 range and I really want to avoid those if I can.

I did play with the *voltage* on the car lock and found that, so long as it had plenty of current available, it would reliably work all the way down to just under 6 volts. 7.4 volt LiPO batteries are common and RC car batteries have commonly available connectors and chargers. I found some reasonably high Ah rated batteries and a nice charger that wasn’t stupid expensive and got them on the way, along with some appropriate connectors.

Next step up on the ladder.

Next, I knew I needed to power the controller. I have a couple of options. I can power it from the USB jack with a converter connected to the battery, but it seems more elegant and controllable to supply 5 volts to it externally. I have batteries coming and I did find some nice 5V 1A DC-DC converters in my earlier shopping, so I found them again and ordered a handful. They came in a package of 10, very inexpensive.

Next step up on the ladder.

Big epiphany, with the controller board, I can directly operate a servo! This eliminates the need to ‘protect’ the lock motor and a servo is significantly smaller than the lock motor. I can power it directly from the board or even directly from the battery if the 5V 1A supply is not enough. That will also ensure I have more room for my RC car battery.

Next step up on the ladder.

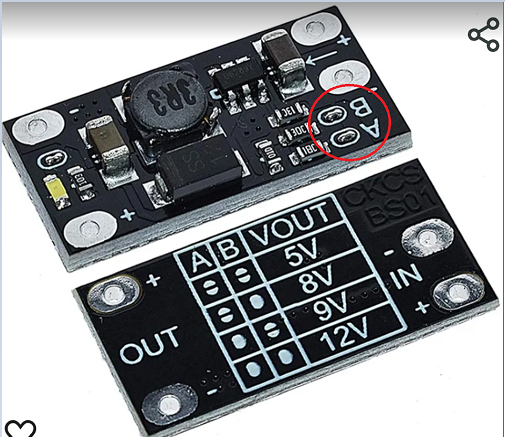

I had tested the nice big warning light that I want to put on the Activator to remind people to reset it. At the time I chose this one, everything was running on 12 volts, so it made sense to get 12 volt lights. I got a variety of colors. I particularly expect to use red for the Activator light and red, yellow and green on the Control Box. Experimentally, I connected a red light to 7.4 volts from my bench power supply. It lights up, but not very brightly. It will almost certainly be hard to see outside even at full brightness. I need 12 volts for at least the light. Luckily, the same DC-DC converters I ordered above are actually adjustable for 5, 8, 9 or 12 volts output, so other than having to add it to the board, problem solved.

Next step up on the ladder.

This week, I had some time to tinker and got the board built and populated. I don’t think I connected power to it before verifying the power, but more on that in a minute. With the controller board out, I connected one of my batteries and found that the 5V board was outputting 7.9 volts. The 12V board was good, but 7.9V is not 5V.

The default setting of the tiny zero ohm resistor jumpers on the boards is for 12V, so for the 5 volt board, I just removed both of them. I looked carefully to ensure there was not a solder bridge. I alternately shorted them to ensure that they indeed changed the voltage. Only with both shorted did they provide the proper 12V output. All three other settings were higher than they were supposed to be.

While I was looking up this chart to verify that I did indeed have the jumpers correct, I noticed the error of my ways. This little board is designed for 3.7 volt input.

At some point I briefly looked at 3.7 volt batteries and I’m sure these converter boards ended up in my search history at that point. Later when I just wanted converter boards, I remembered them but I was too high up the ladder to see the voltage 🙂

As luck would have it, 3.7 volts works fine for me, other than the expense of having purchased some 7.4 volt batteries that I don’t technically need, at least not for this part of the project. My charger will work for them, too.

However, I might choose better DC-DC boards.

I mentioned that these are inexpensive boards. $10 for a 10 pack. The voltage selection chart silkscreen on the actual board is completely illegible. All four settings for A & B look identically blotchy. And for what it’s worth, the output power pins are spaced 0.4″ apart, while the input power pins look at a glance like they are 0.2″ apart, but they are not. They fall somewhere between 0.2 and 0.3″. The output seems to be good for about 6 watts. The specs are somewhat iffy, but hint that higher voltages are probably at less than the 1A specified for 5V. The examples they give all work out to slightly more than 5 watts. This is fine for my needs. I don’t expect to need more than 150mA for 12V.
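As a rough sanity check on that power budget, here is a small Python sketch of the arithmetic. The 5 watt figure is an assumption taken from the seller's examples mentioned above, not something measured, and the function name is just for illustration.

```python
# Assumption: the converter can deliver roughly a fixed power budget,
# so the available current shrinks as the output voltage goes up.
POWER_BUDGET_W = 5.0


def max_current_amps(power_watts, volts):
    """Current available at a given output voltage for a fixed power budget."""
    return power_watts / volts


for volts in (5, 8, 9, 12):
    milliamps = max_current_amps(POWER_BUDGET_W, volts) * 1000
    print(f"{volts:>2} V -> about {milliamps:.0f} mA available")

# The warning light load I expect on the 12 V rail, in watts
light_watts = 12 * 0.150
print(f"12 V at 150 mA is {light_watts:.1f} W")
```

Under that assumption, the 12 volt setting still has a few hundred milliamps available, which is comfortably more than the 150mA I expect to draw.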

Until I put the Activator board together, I’d been running this controller on either a laptop USB port or a USB power pack. Now that I had it on the board and running on my bench supply, standing in for the 3.7 volt batteries that were en route, it was nice to leave it on the kitchen table where it was close enough to hear the servo cycle but off my desk, which was cluttered enough with the Trigger and ControlBox modules. That way I could hit the buttons on them and observe the display on the ControlBox. For some reason, I had to interact with the Activator. I don’t remember why. While I was near it, I smelled that classic ‘something is hot’ smell. Since I had thrown overvoltages at it earlier, I finger probed and discovered that the inductor on the 5V board was pretty hot to the touch. I immediately shut off the power supply. I pulled the control board off, turned on the power supply and noted that the voltage was indeed 5 volts, so that’s good.

I presumed that the little 1A supply was having trouble keeping the controller and the servo both supplied, so I rewired the servo to run directly off the battery. That is a little below spec for it; it is rated for 4.8 to 8.4 volts, but in testing, it worked fine and still pulls plenty strong. I do not expect it to be a limitation for the Activator sear. Of course for the testing, the controller was plugged back in and in just a little bit, I smelled ‘hot’ again. I probed the power boards again and while it was not as hot, the 5 volt board was definitely getting warm. I probed around on the controller and ZOWIE a chip on the board was hot enough to immediately cause pain. I removed power again and pulled the board out. It turns out to be the USB serial chip. Once it cooled off, I plugged it into a USB cable on my laptop and it got seriously hot in a couple of seconds, so it definitely has a problem.

As mentioned earlier, I don’t think I connected the 7.4 volt battery to the system with the controller in place, thus applying basically 8 volts to its 5 volt input, but I can’t authoritatively say never. Plus, after working for weeks under various conditions, it acted up the day it was running on the new carrier board. The CPU seemed to be working, by the way. The USB chip was smokin’ hot, but even before I discovered it was baking, the device was responding to ESP-NOW messages and operating the servo. I’m just not sure it would have lasted much longer.

Happily, I have one not in current use, so I could swap it out. It is a slight pain to update the MAC addresses in the software to use the new device, but at least this one doesn’t sterilize the air around it. As these are my favorite boards for this application, small cheap boards with the external antenna connector and not a lot of unnecessary IO, I may need to order a few more of them. I do have some 40 pin ESP32s with external antenna connectors, so I’m not without a way forward if needed.

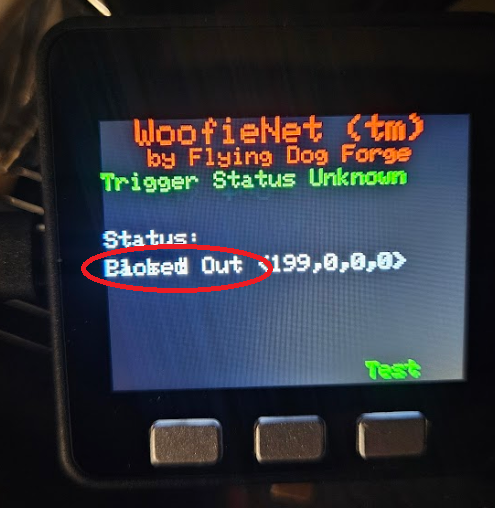

In other hardware news, I have been using a slick device with a built in screen for the ControlBox unit. I don’t know if this will be the final controller for the ControlBox, but it has its advantages.

It is an M5Stack Core. I originally got this for ESPHome use and HomeAssistant, only to find that it was not yet supported there. I have not rechecked lately. Anyway, it is an ESP32 in a nice case with a few built in goodies, most importantly for this, a 320×240 TFT display and three front mounted buttons. It also has a speaker, a TF card slot and, while I’m not sure how long it can run on this, a built in 110mAh battery. I am thinking about building a little program to send a message to another module once a minute, with the other module logging the time, to see how long it lives.

For use as a real ControlBox controller, it has one drawback. There is not a really strong way to secure external connections to it. It has a row of exposed pins on one side and exposed pin jacks on three sides. The pins are not ideal ones for reuse for my purposes and it would be even more difficult to really secure connectors to the pin jacks for a device that might get tripped over or dropped and has to travel on gravel roads in someone’s trunk or pickup bed. In the long run, I may need to use some discrete components for this setup.

But for now, it seems a good way to start, with a caveat.

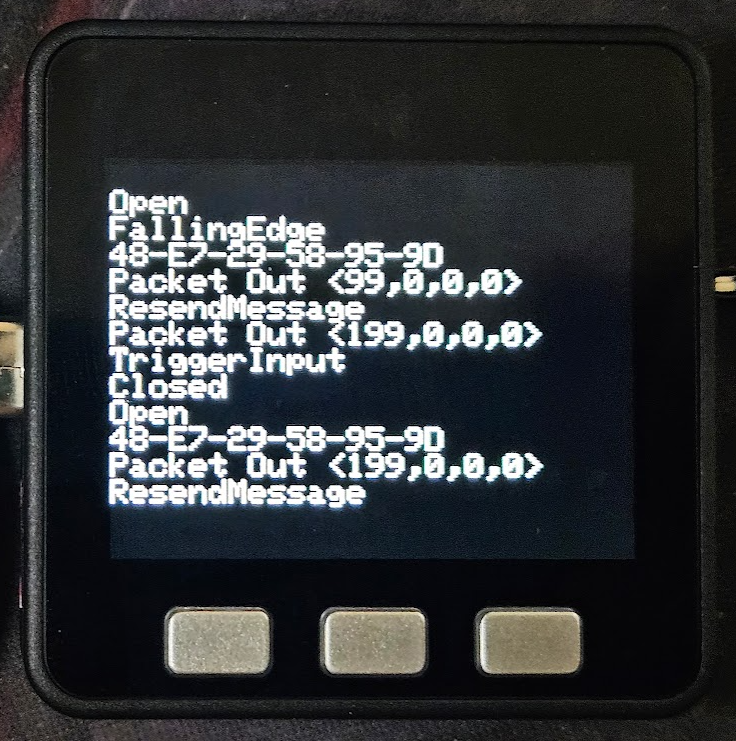

This was before I had it doing anything with text fields. I was sending stuff I would normally dump to Serial0 to the TFT instead.

I have found that, as with many of these small hardware displays, or at least the libraries written for them, you often can’t just throw text at it and have it behave the way you would like, such as scrolling like a classic terminal screen. On the screen as shown above, I can send one more line to it, then any subsequent lines will just continue to clobber that bottom line, text written on top of existing text.

Visuino supports text fields. The field is located anywhere on the screen by x-y pixel coordinates, then prints the text you provide with the font, size and color attributes you provide. But so far as I can find, there is no clear command. Subsequent text clobbers what’s already there. Argh.

I had been working on this issue iteratively in what spare time I have for a few days now. I have a nice title bar and I intend to determine and display the status of the two (currently) available remote devices and provide a 3-4 line window of scrolling messages about what’s going on: trigger received, trigger sent, test sent, device needs reset, etc. I have a label over one of the buttons at the bottom of the screen and if you push that button, it sends a test trigger to the activator. All of these things work, until you put enough text somewhere to clobber what’s already there.

The only way I have found to clear the screen is to literally clear the entire screen, write it black, then rewrite all this stuff back to it. That seems completely ridiculous and it puts it on me to maintain a screen map somewhere. That’s not what I want to do. I want to make my hardware do stuff and print stuff on the screen to tell my users what my hardware is doing for them. The IDE should know how to make the *display* do stuff.
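For what it is worth, the "screen map" for the message window does not have to be big. The sketch below is plain Python, not Visuino or any display library, and every name in it is hypothetical. It keeps only the newest few messages and pads each one to the full window width, on the assumption that the display draws a background behind each character so that the padding overwrites whatever was there before.

```python
from collections import deque


class MessageWindow:
    """Keeps only the newest `rows` messages so the window can be redrawn."""

    def __init__(self, rows=4, width=26):
        self.width = width
        # A deque with maxlen drops the oldest line automatically
        self.lines = deque(maxlen=rows)

    def add(self, text):
        # Truncate anything too long for one row
        self.lines.append(text[: self.width])

    def render(self):
        # Pad every line to full width so stale characters get overwritten
        padded = [line.ljust(self.width) for line in self.lines]
        # Fill any rows not yet used with blanks
        padded += [" " * self.width] * (self.lines.maxlen - len(padded))
        return padded


window = MessageWindow(rows=4, width=26)
for message in ("trigger received", "trigger sent", "test sent",
                "device needs reset", "trigger received"):
    window.add(message)

# Each rendered row would be drawn at a fixed y position on the display
for row in window.render():
    print(repr(row))
```

Only the message window gets redrawn this way; the title bar and button labels would be left alone.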

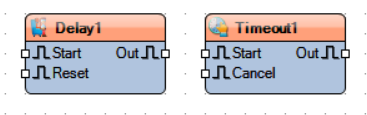

Sparse documentation is a recurring theme here. For example, these are two very similarly named components:

If you get context sensitive help for Delay, you get a Wiki page with thin but not entirely useless descriptions of the settings for the component and what the Start, Out and Reset pins do, as well as links to some example projects that happen to use it.

Maybe Delay doesn’t do exactly what you are looking for. Logically, it seems like Timeout might do a similar kind of thing but attempt to get context sensitive help for Timeout and you just get:

It offers that you can search for it in other places, but so far, that has universally not helped on anything I have searched for. I suppose it could happen. Maybe I haven’t looked for the right thing yet.

Which brings me indirectly to my brief but severe irritation last night.

Regardless of this irritation with the documentation, I like the product. I was able to reach a working point with my devices very quickly. As always seems to be the case, a lot more time goes into the user interface than into the actual device (especially if you can’t figure out how it works). Anyway, I had been running on a 15 day trial version of Visuino Pro and there were two days left. I saw no reason to not proceed with the purchase. I looked at the version matrix and while I had been running the Pro on trial, I didn’t see anything in the Pro column that I was actually using, so I elected for the Standard version with a 1 year subscription for updates. The purchase, install and registration went through without a hitch. It automatically opened after installation and I pulled up the ControlBox project and played with it a bit. After a little while, I needed to open a second instance to make a companion change to the Trigger project. When I did that, I noticed/remembered that I still had Visuino Pro installed and, fatally, decided to pull up control panel and remove it.

Long story short, had I removed Pro before installing the Standard version or reinstalled Standard after removing Pro, things would have been ok, but the removal of Pro removed all the components from the Visuino library folders. There was some minimal number of components that I guess are built in to the binaries and support for only the Arduino Uno R3, but my projects now spawned a huge list of errors, most of which were missing components and many of those were specific to my missing board. Once I had seethed down to being able to write without being overly abusive, I dashed off a note to Visuino support. I don’t remember exactly the details, but in short I wondered why the uninstall script would remove user files like all my components.

Interestingly, all of this was loaded on my work laptop, which heavily uses Microsoft OneDrive. Not long after my note to support was sent, OneDrive serendipitously popped up a dialog asking if I wanted to permanently delete the several thousand files I had deleted recently. I dove into that dialog and found that I could restore the folders that the Visuino uninstall script had deleted. I ran Visuino and everything was happy as it could be. I was exhausted, so I shut everything down and went to bed!

By morning, I had a reply from Visuino support, patiently telling me that a reinstall would most likely fix it and that my own projects were safely stored elsewhere. I am certain that is true and I will probably do that because in restoring those folders, I am sure I now have some Visuino Pro components that I have technically not paid for. Then again, the program and/or license may be smart enough to prevent them from working. Shrug. As I noted when I looked through the version matrix, I wasn’t using any Pro components, which consisted mainly of FFTs and other such advanced data processing components. Interesting, but not applicable to anything I am likely to need for yanking on chains by novel means.

Where The Path Leads

I have made what I think is a lot of good progress with both Visuino and the hardware.

The Visuino sketch shown in the last post kind of worked regardless of being very wrong in a couple of places 🙂

I had made a comment on a video about the recent massive expansion of Visuino. I noted how my code basically ran once when the first signal was received after power up, but since that same value gets sent repeatedly, the received message never changes and the code never seems to see subsequent received messages. None other than company founder Boian Mitov replied, asking for my program and details so he could offer suggestions. I excitedly sent a (far too wordy, as is my modus operandi) email and expectantly waited. Of course, I continued working on it and found a (naive) workaround that (kinda) worked, though it would come to light later that it was not actually responding to *the* command, but rather *any* command.

Several days later, the reply came from Mitov, with apologies for the delay, but with a beta update of the software that had several changes, including how the CompareValue component works. Between the guidance provided and my own experimentation in the meantime, I was definitely set upon the proper path and that piece of code was *truly* working!

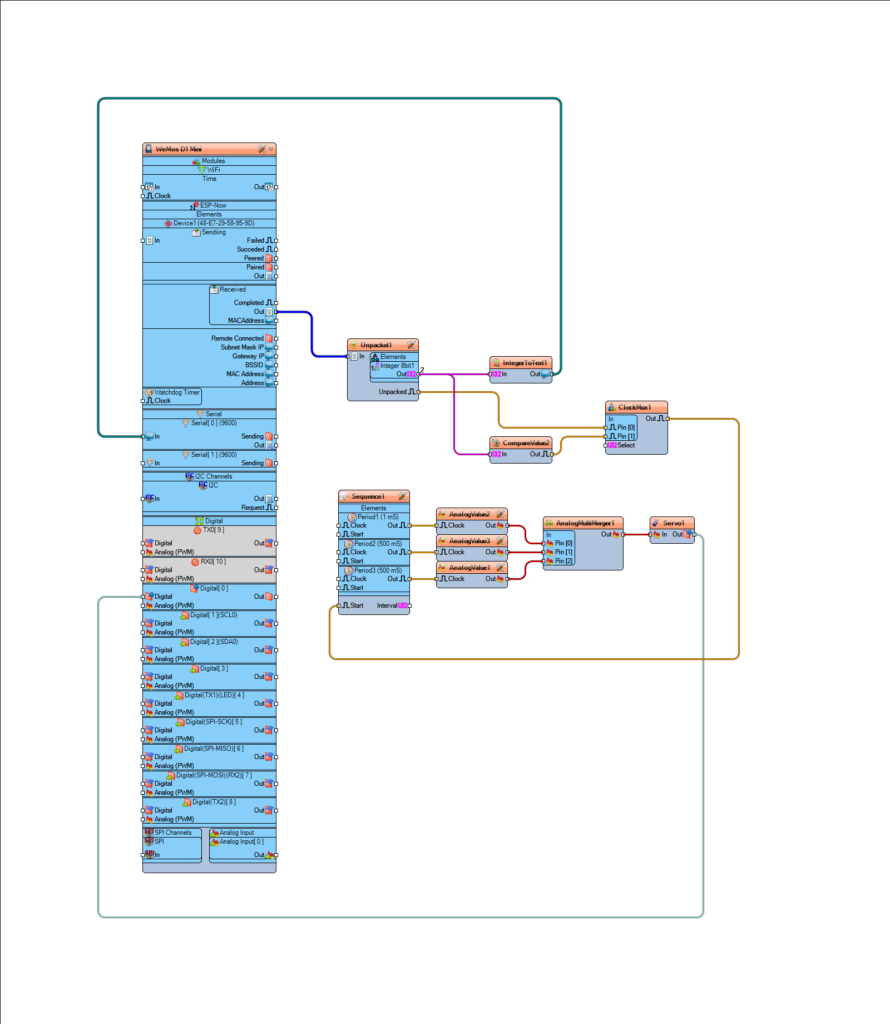

My current design has three main devices, the Trigger, the Activator and the ControlBox between them. I have three ESP8266 devices, appropriately labeled Trigger, ControlBox and Activator. Trigger looks for a button press and upon detecting it, sends a message indicating that to its ESP-NOW peer, the ControlBox. At this point, ControlBox literally just retransmits the received message to its other peer, the Activator. Activator receives the message, verifies that the message matches the command to act and then cycles a servo, which will trigger the mechanical yanking device.

ControlBox will also have a dry contact input so that Trigger is not necessarily needed in all cases. Eventually, ControlBox will have a display and buttons driving a menu system where various behaviors and parameters can be selected.

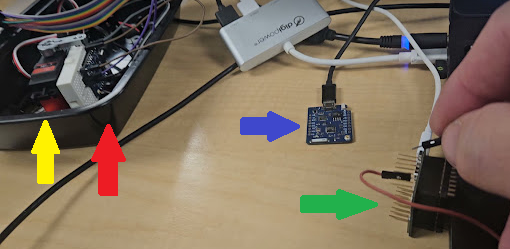

At one glorious point, all three ESP8266 boards were scattered across my desk, communicating wirelessly at a range of tens of centimeters. It looks more chaotic than it really is.

The Trigger device is circled in green, the ControlBox in red and the Activator in blue.

As it currently works, pressing the button on the Trigger sends a message (an integer, 199) to the ControlBox. At this point, the control box retransmits the received message unmodified to the Activator. If the received integer at the Activator is 199, it cycles the servo, which will one day soon trigger the spring loaded device.
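The message flow above can be sketched in a few lines of ordinary Python. This is only a model of the logic, not the Visuino project or any ESP-NOW code; the function names are made up for illustration and the only value taken from the real system is the integer 199.

```python
ACTIVATE = 199  # the integer the Trigger sends, per the description above


def press_trigger_button():
    """Trigger: a button press produces the activate message."""
    return ACTIVATE


def control_box(message):
    """ControlBox: for now, pass the received message along unmodified."""
    return message


def activator(message):
    """Activator: return True only if the servo should cycle."""
    return message == ACTIVATE


# A button press travels Trigger -> ControlBox -> Activator
print(activator(control_box(press_trigger_button())))  # True

# Any other value arriving at the Activator is ignored
print(activator(control_box(42)))  # False
```

Keeping the comparison at the Activator is what leaves room for other message values later, such as status requests or parameter changes.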

Future features will include a sense switch in the activator to warn people at the ControlBox that it has not yet been physically reset. Because of the way stages are reset between competitors, some triggering devices can inadvertently trigger a moving target before the stage reset is completed, so being able to arm/disarm the Activator from the ControlBox is a necessary feature. Since all these devices will be run on batteries, a battery status indication at the ControlBox will help ensure that all devices are ready to go. Finally, the ControlBox will be able to sequence multiple Activators. This is a rarely needed feature, but if my device can do this, it will sell 🙂

The fun bits that I have learned along the way:

I need to accommodate either a normally open or normally closed input for the Trigger. The DigitalDemux component makes that pretty simple. The contact goes to the input of the demux. The select switch goes to a DigitalToInteger component to provide a selection for the demux. The outputs of the demux go to EdgeDetector components, one configured for rising edge and one configured for falling edge. The same logic will be duplicated for the electrical input of the ControlBox.
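In procedural terms, the demux and the pair of edge detectors boil down to picking which transition counts as a press. Here is a minimal Python sketch of that idea, assuming a closed contact reads as True; the real polarity depends on how the input is wired, and these function names are not Visuino components.

```python
def pressed(previous, current, normally_closed):
    """True on the transition that means the contact was actuated.

    Assumes a closed contact reads True. A normally open contact closes
    when actuated (rising edge); a normally closed one opens (falling edge).
    """
    if normally_closed:
        return previous and not current  # falling edge
    return (not previous) and current    # rising edge


def count_presses(samples, normally_closed):
    """Count actuations in a list of successive input readings."""
    count = 0
    for previous, current in zip(samples, samples[1:]):
        if pressed(previous, current, normally_closed):
            count += 1
    return count


# Normally open contact: idle False, two separate closures
print(count_presses([False, False, True, True, False, True], False))

# Normally closed contact: idle True, one opening
print(count_presses([True, True, False, True], True))
```

Because only the edge is counted, holding the contact in its actuated state does not keep retriggering.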

When I first implemented the ControlBox, I overcomplicated it. Out of hardware hacking habit, I tried to use the minimum number of bits possible. I had set up the Packet and Unpacket components to use 8 bit integers. This worked, but somewhere in the mix, an integer is a 32 bit value and wrestling with resolving that was becoming troublesome. I had a situation wherein the first byte of the full integer was being interpreted as the command byte and that first byte was *not* 199. Eventually, I realized that ESP-NOW sends a packet with a minimum of 36 bytes, so whether I send a payload of 1 byte or 4 bytes makes about 10% difference in the entire message, and dealing with a regular signed integer is simpler than trying to shoehorn my ancient bitbanger ways into the mix. Once I abandoned all the conversion necessary for 8 bit integers and literally connected the received output to the transmitter input, it just worked.
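To illustrate how the first byte of a 32 bit integer can be something other than 199, here is a small Python sketch using the standard `struct` module. It is not a diagnosis of what the Packet and Unpacket components were actually doing, and treating the 36 bytes as fixed per-packet overhead is an assumption made only for the arithmetic.

```python
import struct

COMMAND = 199

# 32 bit signed integer, least significant byte first
little = struct.pack("<i", COMMAND)
# 32 bit signed integer, most significant byte first
big = struct.pack(">i", COMMAND)

print(list(little))  # [199, 0, 0, 0]
print(list(big))     # [0, 0, 0, 199]

# Reading only the first byte gives different answers depending on byte order
print(little[0], big[0])

# Assumption: 36 bytes of overhead on every packet regardless of payload
OVERHEAD_BYTES = 36
one_byte_total = OVERHEAD_BYTES + 1
four_byte_total = OVERHEAD_BYTES + 4
growth = (four_byte_total - one_byte_total) / one_byte_total
print(f"4 byte payload makes the packet {growth:.0%} larger")
```

Either way, sending the whole integer and comparing the whole integer at the far end avoids having to care which byte comes first.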

The Activator has been the problem child and was the original reason I posted the comment on the YouTube video. It needs to react to an ESP-NOW command message, but only the right command. Other commands might include a status request, a command to turn a light on or off or a command that sets an operational parameter. I can’t just assume any message is a command to activate. This is why I was struggling so with the old CompareValue component. However, the new version works better and other advice from Mitov cleaned up everything else. The chain is very simple and straightforward now. The received message is tested to see if it is the activate command. If so, the EdgeDetect component converts that to a clock pulse, which activates the sequencer to cycle the servo.

I learned that the sequence works slightly differently than expected. The AnalogValue is set immediately, then the sequence time elapses. I first presumed that this timer was started and ran, then at the *end* of the sequence, the AnalogValue would be asserted. Now that I realize the proper order, of course that makes more sense than what I was thinking. However, before I figured that out, there was a noticeable delay between when the button was pressed and when the servo moved. With copious serial prints I was able to verify that the delay was not in the transmission or reception of the ESP-NOW message; that is essentially instantaneous. The delay came from my misinterpretation of the Sequence component. Originally, I was setting the servo to 0 for 10mS, then for 500mS setting it to 90 degrees, then setting it back to 0 for 500mS. The intent was to first ensure that the servo was in its home position, then move to 90 degrees, with the first 500mS to ensure it had time to move, then move back to 0 degrees, again with a 500mS time to ensure this had time to happen.

Wellllll… turns out we don’t need most of that. The Servo component includes an initial value, which apparently homes the servo automatically at startup, and the timing of the sequences was not where I thought it was. The AnalogValue for moving the servo is asserted immediately, then 500mS later, the return to zero value is asserted. This is much simpler than I first presumed and makes for a MUCH more elegant process.
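The difference between the two readings of the Sequence component is easier to see as a timeline. This Python sketch only models the two interpretations described above; it is not how Visuino implements anything, and the step labels "home" and "tripped" are stand-ins for the actual servo values.

```python
def asserted_at_start(steps):
    """Each value is asserted immediately, then its period elapses."""
    timeline = []
    now_ms = 0
    for value, hold_ms in steps:
        timeline.append((now_ms, value))
        now_ms += hold_ms
    return timeline


def asserted_at_end(steps):
    """My mistaken model: the period runs first, then the value is asserted."""
    timeline = []
    now_ms = 0
    for value, hold_ms in steps:
        now_ms += hold_ms
        timeline.append((now_ms, value))
    return timeline


# The original three step sequence: (value, period in mS)
original = [("home", 10), ("tripped", 500), ("home", 500)]
print(asserted_at_start(original))
print(asserted_at_end(original))

# The simplified sequence: trip right away, return home 500 mS later
simplified = [("tripped", 500), ("home", 0)]
print(asserted_at_start(simplified))
```

The same list of steps puts the servo movement at very different times depending on which model is true, which is why guessing wrong makes the timing so confusing to debug.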

If I were to make any complaint about Visuino, it would be that we need some kind of programming guide to demystify the inner workings of these components. The examples in the plethora of available videos often show what to do to make a light blink or whatever, but rarely describe *why* a certain feature is used. For example, a demo on operating a servo had a DivideByValue between the selected AnalogValue and the Servo. Only after extensive experimenting did I figure out that this provides the appropriate fraction needed to scale the degree value requested to the range of PWM that the particular servo will respond to. In the absence of more precise guidance, one could easily assume that the Servo component scaled these degrees itself and would accept a degree value as input. Quite accidentally, “0” and “90” as direct inputs to my servo appeared to work properly, but it turned out to be not 90 degrees, but the full 270 degree range, viewed I would say “backwards”. 0 went to zero, but “90” was so far beyond the 0 to 1 range that it simply went to 270 degrees. As it is a digital servo capable of 360 degree rotation, it took the shortest path counter-clockwise to the requested position, as opposed to the long way, 270 degrees clockwise. Some documentation revealing that the servo requires a 0-1 range instead of a direct degree heading might have revealed this issue much earlier in the process.
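The scaling that the DivideByValue step provides can be written out as simple arithmetic. In this Python sketch, the 270 degree travel is taken from my servo as described above, and the clamping of out of range values to 0-1 is an assumption that matches what I observed rather than anything documented.

```python
SERVO_RANGE_DEG = 270.0  # full travel of this particular servo


def degrees_to_unit(degrees, full_range=SERVO_RANGE_DEG):
    """Scale a requested angle to the 0-1 range the servo input expects."""
    return degrees / full_range


def clamp_unit(value):
    """Assumption: inputs outside 0-1 are limited to the nearest end."""
    return max(0.0, min(1.0, value))


# What I meant to ask for: 90 degrees is one third of the travel
print(degrees_to_unit(90))

# What I actually sent: a raw 90, which lands at full travel
print(clamp_unit(90) * SERVO_RANGE_DEG)
```

A servo with a different travel would need a different divisor, which is presumably why the scaling is left to a separate component.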

As for the hardware, I began laying out prototype circuit boards for the system, starting with the Activator. This is how it turned out before I started wiring it up.

At the top is the ESP8266 board. In the middle are two boost regulator boards (more on that in a minute) and a cheap motor driver board that will actually be used to drive the warning light, with room to drive a motor in the future. Along the bottom are connections for the battery, external power switch, sense switch for the plunger and the light and finally, the header for the servo to trip the sear.

With the power supply boards, I got a little too clever for my own good. They were spec’d out when I was contemplating 3.7V batteries. I don’t recall why I landed on 3.7 to start with, but I did. These boards take a 3.7V input and boost it to 5, 8, 9 or 12 volts, configurable by removing the jumpers A or B or both.

Somewhere in there, I decided to order 7.4V batteries instead of 3.7V. Luckily, I decided to test the voltages before firing up the board because, running with 7.4V batteries, the lowest available voltage is about 8 volts. I have 3.7V batteries en route.
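The reason the 5 volt setting cannot work from the bigger battery is that a boost converter only raises voltage. A very idealized Python model of that, ignoring diode drop and everything else about the real board, looks like this; the function name is hypothetical.

```python
def ideal_boost_output(v_in, v_set):
    """Idealized boost converter: it can raise the input voltage to the
    set point, but it cannot bring the output below the input."""
    return max(v_in, v_set)


SETTINGS = (5, 8, 9, 12)  # the selectable outputs on these boards

for v_in in (3.7, 7.4):
    outputs = [ideal_boost_output(v_in, v_set) for v_set in SETTINGS]
    print(f"{v_in} V in -> {outputs}")
```

With 3.7 volts in, every setting is above the input and the model returns the set point. With a 7.4 volt pack, the 5 volt setting can never be reached, and a freshly charged pack sits above its nominal rating, which fits with measuring roughly 8 volts.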

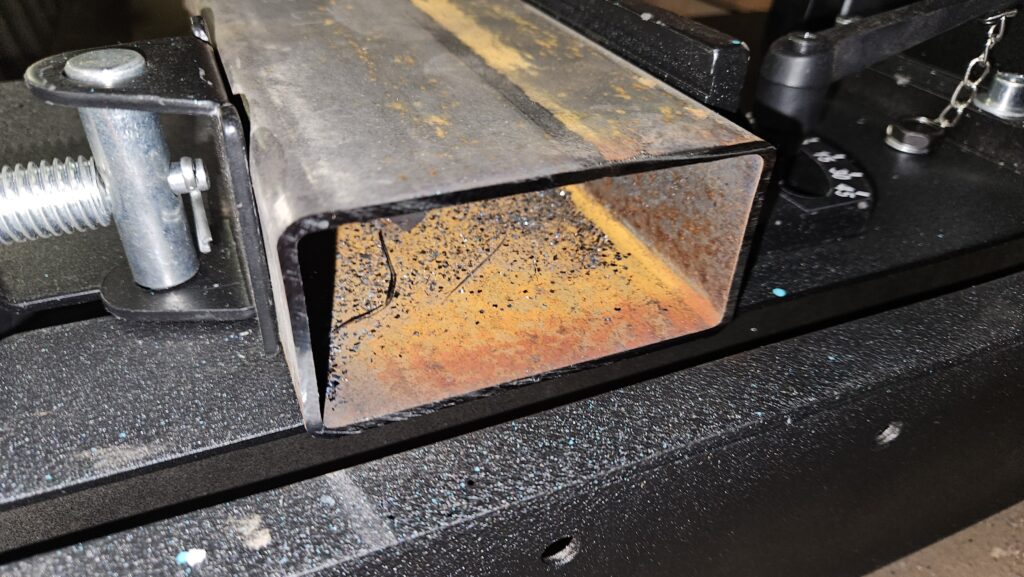

In other hardware news, I acquired a rather nice carbide metal cutoff saw. This thing makes amazing essentially mirror smooth cuts!

This 2×4 14ga tubing was cut in 10-15 seconds and looks like this!

This will definitely up the fabrication game.

The Path Less Taken

One can find plenty of derision online concerning the Arduino IDE. Shrug. Maybe I am not sophisticated enough to care that much about the occasional issue. I do have two main gripes about it. Once you start a compile, there does not appear to be a way to abort it. This comes up often for me because I will make some change that needs to be compiled and uploaded to test, then while the compiler is still building, I will see some other error that I need to correct. The best I can do is unplug the board so that at least the upload will fail. That brings up the other issue, although this may not be specifically an Arduino IDE issue so much as a general hardware issue. All of these devices that I am working with connect to the PC via USB. Sometimes, all the unplugging, replugging, pressing the reset button on the boards, etc, will cause the USB subsystem to kinda get lost. I don’t know if it’s the IDE, the UART chip drivers on the boards or what. Related to that, those UART chip drivers don’t always get along with USB 3.0. Since I do 99% of this work on a laptop that has two available USB jacks, I use a USB hub that happens to have two USB 2.0 jacks and one USB 3.0. Because my current project requires me to develop on three interconnected devices at the same time and one of the available jacks on my laptop is occupied by my USB headset, it can be pretty easy to forget and plug something into the USB 3.0 jack on the hub. I realize this is a problem once the cards kind of stop responding. Thus far, it takes a reboot to restore trustworthy function.

So, besides all that, I find the Arduino IDE itself is just fine, at least until you have to communicate with something more complex, like a TFT display. The data being fed to the working display is generally not the issue; it’s getting the thing working in the first place. A problem that I had early on, though I have largely solved it by now, is that ESP-NOW is implemented a little differently in the supplied libraries for the ESP32 and the ESP8266. My intent was/is to put tiny ESP8266 boards in the remote devices and a more capable device equipped with a screen and some buttons driving a menu system as the controller. The example code provided for the ESP32 family is much closer to a good starting point for my project than the examples provided for the ESP8266.

The library names are slightly different, which is itself easy to fix, once you know the right library names to use. Trickier to solve, however, is that a very important data structure is slightly different and at least one constant name is different (ERR_OK vs ESP_OK). Luckily the incredibly helpful Programming Electronics Academy published a video on exactly this subject, using the example code from the ESP32 family and modifying it to run on an ESP8266, although I was not aware of it until I was a couple of days into trying to find and solve the problem myself.

Armed with this info and some time to figure out exactly what I needed to accomplish, I got two devices working and the devices can send and receive data from each other.

Next, the ControlBox device needed to be able to send its data only on demand. I replaced the delay loop with a few different implementations of detecting a button press. Its role as the ControlBox will require it to accept some kind of switch change to send the Activator the go command. Eventually, it will be able to respond to either a local switch or a remote ESP-NOW device sending the appropriate request.

With the button sorted out, I started the activator conversion. In the current analog system, we just throw voltage at a door lock motor to trip the activator. This is fairly elegant and thus far very reliable. However, the lock motor is physically large and, even though used only briefly, it is pretty power hungry as well, requiring an also physically large industrial timer relay to protect it. Converting this activation device to a servo will recover space that will be better utilized by a battery in the new design, and the servo will arguably require less power.

Getting a connected servo doing *something* itself fairly easy, but getting it to do precisely what I want it to is much more difficult.

As I never stop putting “ESP NOW” in the YouTube search, I stumbled across another way to do this stuff.

The video that introduced me to Visuino makes no mention of it in the title, but as I watched it, there was this visual IDE being used. It was almost mentioned in passing, but in only 8 or 9 minutes of real time, an ESP32 board and an ESP8266 board were set up by dragging virtual components onto the screen, interconnecting them with on-screen wires and adjusting a few settings in the components. One has a temperature/humidity sensor and the other a small OLED display. The display on one shows the temperature and humidity data from the sensor on the other.

It is pretty easy to argue against using such packages from a strictly code efficiency point of view. Visuino produces code that is essentially impossible for the inexperienced programmer to follow and the code sizes produced would not be unfairly called monstrous. My most complex sketch to date (spoiler: I am using Visuino) is nearly 300K and does only slightly more than the 3K Arduino IDE code that preceded it. These ESP boards have megabytes of flash to work with, so it is kind of a non-issue.

All is not perfectly rosy, but with an hour or so of judicious tinkering, I had a working receiver, receiving the data sent by my “old” Arduino code. Getting it to activate the servo based on receiving that data turned out to be tougher to figure out, though largely because I struggled with the object oriented approach after so much time in procedural methods.

With the knowledge gained from the receiver project, it was much easier to rebuild the sender in Visuino and then to add a button to the sender.

Just this morning, I succeeded with the quick and dirty version of what will one day be deployed in the field, a Trigger device that will initiate the action, a ControlBox to intervene and/or modify before it sends the command on to the Activator.

The Trigger (green arrow) responds to a switch closure and sends a byte of data to the ControlBox (blue arrow) which resends it to the Activator (red arrow) which does a quick 90 degree cycle of the servo (yellow arrow).

For this quick demo, I am using all ESP8266 boards, specifically the Wemos D1 Mini Pro, which is supplied with an external antenna connector. Since at least one of these devices will be inside a closed steel box, the external antenna is likely to be a requirement.

The 8266 boards are perfectly appropriate for the two far ends of the system and probably fine for the ControlBox as well; however, I would like to put something with a screen and menu buttons on the ControlBox so that features can be selected in a friendly way. The list of features is still a work in progress, but it includes things such as being able to detect and add new devices and the ability to trigger multiple activators, maybe with a timing sequence between them. There are quite a few controllers available that already have a screen and buttons and other features ready to go, taking away some of the development time required to make the interconnections between those peripherals, and Visuino makes using those peripherals easier. Maybe.

One problem I face with Visuino is that, while there are a lot of supported devices, I already have several devices that would be appropriate to try, but they are not implicitly supported in Visuino. The Arduino IDE provides a pretty easy way to add support for new boards by providing a list of URLs where the supporting device descriptions can be pretty much transparently downloaded. There is a similar list viewable in Visuino, but thus far, I have not found a way to add to it.

Life is tough, but it’s tougher when you’re stupid…

Ok, maybe not stupid, but I did miss something pretty fundamental and I missed it early enough to waste a couple of days of development time. Also, there was a side quest through the quicksand that is Windows USB drivers.

As mentioned earlier, ESP-NOW is expected to fulfill a number of needs for my project. To recap, I have built a reliable target activating system applicable to several shooting sports I am involved in. It is reliable because it is electrically pretty simple, with some switches and relays providing the limited logic needed, a control box with said relays and switches and a battery, and finally the connectors and cabling to hook it all up.

Removing those cables can be done with a wireless system that essentially doubles the build cost for the system. It would also be plenty reliable, but the cost is prohibitive, especially as I do intend to sell this system and I can’t make the price attractive and make a little money on it if I have to spend that much on the wireless technology.

The hardware for ESP-NOW is built in to microcontrollers that already support WiFi and are incredibly cheap. Furthermore, adding a microcontroller will let me replace a couple of other expensive parts while adding even more features.

Of course, ya gotta get it working first.

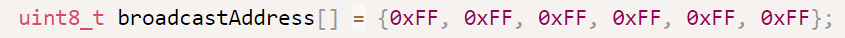

I had easily set up two devices where one could send messages to the other. I found an easy to follow YouTube tutorial for two way communication between two devices and chose that one to implement for the next step. The tutorial had a lot of symmetry to it. Two ESP32 devices, each with an environmental sensor (temperature, humidity and pressure) and a tiny OLED display. The environmental data from each device was displayed on the other. The sketches are identical on both devices other than the manual entry of each device’s peer MAC address, the device it is sending data to. While some of the ESP-NOW tutorials included facilities to scan for peers and automatically record their MAC addresses, this one assumes you know the MAC address for each of your devices and even includes a super simple sketch to extract the MAC of the board it runs on. I ran this sketch and recorded these MAC addresses in a notepad text file for later reference. The sketch requires the MAC address to be stored in an array variable and in the declarations area of the sketch, this array variable was created in this form…

… where you would substitute the MAC address octets of the peer device for 0xFF in each position. If you leave it all FF’s, it will work by broadcasting the data to all devices on the network and the ESP-NOW devices will actually listen. However, the receiving devices do not acknowledge receipt of a broadcast message, whereas unicasting to a specific MAC address does get an acknowledgement. There are situations where broadcast is appropriate, but for my purposes, I’d rather have the acknowledgement.
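As a rough illustration of the peer address handling described above, here is a minimal sketch in plain C++ (no ESP libraries, so it runs on a desktop compiler). The names `Mac`, `kBroadcast`, `parseMac`, `isBroadcast` and `firstMismatch` are made up for this example and are not from the tutorial. It parses a colon separated MAC string into six octets, checks for the all FF broadcast address and reports the first octet where two addresses differ, which is the kind of check that catches a one character copy error.

```cpp
#include <array>
#include <cstdint>
#include <cstdio>
#include <string>

// Six octets of a MAC address (hypothetical helper type for this example).
using Mac = std::array<uint8_t, 6>;

// All 0xFF octets: the broadcast address, which gets no per-peer acknowledgement.
const Mac kBroadcast = {0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF};

// Parse text such as "AA:BB:CC:DD:EE:FF" into six octets.
// Returns false if fewer than six fields are found or extra characters follow.
bool parseMac(const std::string& text, Mac& out) {
    unsigned int v[6];
    char extra;
    int n = std::sscanf(text.c_str(), "%2x:%2x:%2x:%2x:%2x:%2x%c",
                        &v[0], &v[1], &v[2], &v[3], &v[4], &v[5], &extra);
    if (n != 6) return false;
    for (int i = 0; i < 6; ++i) out[i] = static_cast<uint8_t>(v[i]);
    return true;
}

// True when every octet is 0xFF.
bool isBroadcast(const Mac& mac) { return mac == kBroadcast; }

// Index of the first octet where the two addresses differ, or -1 if they match.
int firstMismatch(const Mac& a, const Mac& b) {
    for (int i = 0; i < 6; ++i) {
        if (a[i] != b[i]) return i;
    }
    return -1;
}
```

For example, parsing `24:6F:28:AC:10:3C` and `24:6F:28:AD:10:3C` (both invented addresses) and passing the results to `firstMismatch` returns 3, pointing at the fourth octet.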

In my notepad, I created this string of hex notation digits so that I could just copy and paste the MAC into any script I was working on.

I modified the sketch and simplified it, removing all the code to support the display and environmental sensor because I didn’t have those to connect to it. My sketches sent two bytes to each other. Eventually, my messages between units will also be just two bytes, one as a unit identifier, the other as a command or status. I could probably make that two nybbles and just send a single byte.
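The two nybble idea can be sketched in a few lines of plain C++. This is only an illustration under an assumed layout (unit identifier in the high four bits, command in the low four bits); the function names are hypothetical and each field is limited to the values 0 through 15.

```cpp
#include <cstdint>

// Pack a 4-bit unit ID (high nybble) and a 4-bit command (low nybble) into one byte.
// Values above 15 are masked down to their low four bits.
uint8_t packMessage(uint8_t unitId, uint8_t command) {
    return static_cast<uint8_t>(((unitId & 0x0F) << 4) | (command & 0x0F));
}

// Recover the unit ID from the high nybble.
uint8_t unitIdOf(uint8_t message) { return message >> 4; }

// Recover the command from the low nybble.
uint8_t commandOf(uint8_t message) { return message & 0x0F; }
```

With this layout, unit 3 sending command 10 packs to the single byte `0x3A`. The cost is that there can be no more than 16 units and 16 commands, which is why two full bytes may still be the more comfortable choice.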

The trouble is that my devices would only send one way. I had intended one to be the Activator and one to be the Control Box. Under normal circumstances, the Control Box will send a command to the Activator to instruct it to trip immediately. The Activator will either periodically send a status or it will respond to a poll for status from the Control Box. In my case, however, communication was also only one direction. The sending unit did not have an acknowledgement and the receiving unit never received any messages. I did a few minor troubleshooting steps to ensure that my sketches were indeed identical other than the peer MAC addresses.

The next step was to swap out equipment. I was using one ESP32 for the Control Box, assuming I would want to add more features to that one eventually and they have more I/O pins. The Activator was a very inexpensive ESP8266 board and there was always a chance one might have a bad transmitter. When I started swapping boards and sketches around, the laptop would occasionally not drop the virtual comm port when a board was unplugged. It would sometimes take a reboot to clear this issue. I had two instances of the Arduino IDE running. Juggling two IDEs and two of about four available cards when sometimes the USB subsystem would basically stop responding and require a reboot to fix quickly grew tedious as hell. I reached a stopping point on the first day and had to drop it at least overnight.

The next day, I decided that, since I was fighting some weird issues, I would work with some ESP32 boards instead of the 8266’s. The libraries for ESP-NOW and WiFi are different between the two MCUs. It was just a variable I wanted to eliminate. The situation was largely unchanged, including the USB virtual comm port issues. I had a new symptom there. Now sometimes, I could get two of the same virtual comm port number. A bit of research revealed that sometimes, the USB to serial chips on these boards will have the same serial number, at least as far as Windows Device Manager can tell, so it gives them the same comm port. I could manually edit one of them to an unused port number and make them work, but the more I unplugged boards, the more often the entire subsystem would lock up. Reboots would take several minutes, then time out with an error “DRIVER POWER STATE FAILURE” and it would then reboot itself from the reboot process. It happened several times and took 5-10 minutes EACH TIME to complete a reboot. That was the clue I finally latched onto and worked on replacing the driver for the USB to serial chip on these boards, the Silicon Labs CP210x series.

That is an easy download, but of course by now, things weren’t going to go easy. Eventually, I discovered that there were three separate sets of Silicon Labs drivers installed on my laptop. I presume there was some subtle difference between some of the ESP boards I have plugged into this laptop and each installed its own ‘most recent’ drivers. I deleted them all and installed the fresh download from Silicon Labs, dated in 2023. This seemed to settle down the USB traumas.

Of course, they would still only communicate in one direction.

Now that I felt like I could trust the USB stuff, I added some code to print the device’s MAC address and peer MAC address during the setup() function. It never printed. I added other tag prints to show where in setup execution was. Those never printed. It was like it was skipping the setup() function. Google again.

Eventually, I found some references to the serial port/driver being slow to come up at boot time or after an upload. One solution was to use a while loop to wait until the serial system was active. That didn’t help, either. Finally, one suggestion was to literally wait for 5 seconds before executing the Serial.begin() function and that finally worked! It was kludgy and needed only for sanity checking while developing, but it worked. The two MAC addresses printed out.

Since I copied and pasted the information into my notepad file, I have no idea how one of those MAC addresses was wrong and more importantly, how a C got changed to a D. They are adjacent keys, but good grief.

It can easily be argued that fixing the USB driver thing definitely needed to be done, but because of that distraction and the slow start on the USB serial port connected to it, troubleshooting a friggin’ TYPO took two days.

ESP-HOW

In my last post, I went over some mile high view details of ESP-NOW and my plan to replace the largely analog control scheme for my target activator system with some microcontroller boards running ESP32 and ESP8266 chips. These systems can provide wireless communications for far less than the cheapest alternative that I had found thus far. However, in making that jump, there are many other advantages, some of which feed from one another, but all rooted in using the MCU boards to control the system.

The control box primarily becomes just more control-y, able to leverage MCU features in the activator and trigger devices. The control box will have user friendly lights to indicate whether the activator has been reset and the trigger is ready. With an arming feature, it will be able to ignore trigger inputs during stage reset. With wireless triggers, there is no need to choose an input polarity. Once a later version is configured with a display, more sophisticated features become possible, such as sequencing multiple activators from one trigger or showing the battery charge level of remote devices.

The activator benefits in several ways, where using an MCU introduces cascading improvements. Immediately, I realized that a big problem will be that the activator will now need an internal battery. Since the MCU can take over the timer relay’s one function, that relay can come out, leaving more room for the MCU and a battery. I don’t need a connector for the wire, but I will need a power switch and the external antenna.

I was watching a YouTube video about multitasking MCUs. One of my favorite videos on the subject involved adding more things that were happening at the same time, first a blinking LED, then an LED that fades in, then a button that lights an LED and finally a servo that sweeps, with all of these running simultaneously. BTW, all of that revolves around programming the various delays needed without using the built in delay() function because the MCU can do nothing else while that particular delay is running.
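The usual way to get those delays without delay() is to compare the current time against the time of the last action on every pass through the main loop. Here is a minimal sketch of that pattern in plain C++. The class name `IntervalTimer` is made up for this example, and the caller passes in the current time in milliseconds, which on an Arduino style board would be the value returned by `millis()`.

```cpp
#include <cstdint>

// Fires once every intervalMs without blocking the rest of the program.
class IntervalTimer {
public:
    explicit IntervalTimer(uint32_t intervalMs, uint32_t startMs = 0)
        : intervalMs_(intervalMs), lastMs_(startMs) {}

    // Returns true when at least intervalMs has elapsed since the last firing.
    // Unsigned subtraction keeps this correct when the millisecond counter wraps.
    bool due(uint32_t nowMs) {
        if (nowMs - lastMs_ >= intervalMs_) {
            lastMs_ = nowMs;
            return true;
        }
        return false;
    }

private:
    uint32_t intervalMs_;
    uint32_t lastMs_;
};
```

Each task (the blink, the fade, the servo sweep) would get its own timer, and the main loop would simply ask each one whether it is due. Nothing ever waits, so a button press or an incoming message can be handled between ticks.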

Wait a second, ‘servo’? Epiphany! I can replace the 12 volt lock motor with a suitably strong servo; I don’t need to protect the lock motor if there is no lock motor to protect. Oh, and any servo will be a LOT smaller than the lock motor, so now I have more room for the battery. Oh, and the lock motor was the only reason I was going to need a 12 volt battery, so now I can use a smaller battery, like a 7.4 volt RC car battery, which are made to be easy to swap out and charge.

Hardly mentioned thus far is that the trigger devices can be remote and wireless. There is a good argument for keeping the hardwired connector for certain triggers that don’t really need to be automated. On the other hand, a wireless trigger appliance that hardwired triggers can plug into makes all triggers look alike to the controller software.

The short version (I know, I’m not good at telling the short version of anything) of implementing ESP-NOW is that there are quite a few serviceable example sketches showing how to set it up and get it working between almost any number of devices. The examples tend to show unidirectional communications, with one or more transmitting devices paired with one or more receiving devices. At minimum in my application, I need the control box to receive from a trigger device and send to an activator device. The control box really needs to be able to communicate in either direction. Taken a bit farther, an activator needs to be able to send its status and a trigger might need to be able to receive a command, so from the ground up, I need to deploy them as bidirectional.

At this point, however, I am pretty happy to have unidirectional communications that are responsive. In the little video below, the window on the left is the ESP32-S3-Box set up as the sender. I have a button push set up to trigger it sending its data, in this case the number ’25’. On the right is the D1 Mini Pro set up to print any data it receives as soon as it receives it.

The details are not particularly easy to see in this video, but I think you can tell that there is only a tiny delay between when the S3-Box senses the button and when the D1 Mini displays the received data.

Interestingly, the button press needs work. No matter how debounced the button is, it somehow sends the data twice per button closure and once upon button release. None of the logic in place *should* do that, but it does. I suspect it is because the example code scans for all slave devices and puts them in an array. The button press triggers a loop which counts through the list of slaves and sends the data to each. I am guessing that something in that loop is to blame for the multiple sends. I don’t want to completely eliminate the loop because a near future version needs to be able to sequence through several activators. Plus, my button press might not be perfectly well executed. Maybe it isn’t as debounced as it should be.
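One way to rule out the button itself is to make sure the code reacts only to the debounced press edge and never to the release. The sketch below is plain C++ and is not the code from my sketch or from the example; `DebouncedButton` and `pressedEvent` are hypothetical names, and it assumes the raw reading is `true` while the button is held. It does nothing about the send loop, so it can only confirm or eliminate the button as the cause.

```cpp
#include <cstdint>

class DebouncedButton {
public:
    explicit DebouncedButton(uint32_t stableMs) : stableMs_(stableMs) {}

    // Feed the raw reading (true = pressed) and the current time in ms.
    // Returns true exactly once per press, after the reading has held steady
    // for stableMs. Releases never return true.
    bool pressedEvent(bool raw, uint32_t nowMs) {
        if (raw != lastRaw_) {   // reading changed: restart the stability window
            lastRaw_ = raw;
            changedAtMs_ = nowMs;
        }
        if (nowMs - changedAtMs_ >= stableMs_ && raw != stable_) {
            stable_ = raw;       // accept the new debounced state
            return stable_;      // report only the released-to-pressed edge
        }
        return false;
    }

private:
    uint32_t stableMs_;
    uint32_t changedAtMs_ = 0;
    bool lastRaw_ = false;
    bool stable_ = false;
};
```

If the data still goes out more than once per press when the send is gated on `pressedEvent`, the button is off the hook and the loop over the slave list becomes the main suspect.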

My first implementation of the wireless setup will actually have the units hard coded to eliminate such issues until I am ready to tackle them. I don’t need to be fighting on multiple fronts.

The activator software really doesn’t need to do much for a successful version 1.0. Receive the activate command, operate the servo as required to release the physical device, then sense whether the physical device has been reset or not.

Once I had really decided to go the MCU route, the primary trigger device that I have been thinking about is an ESP-NOW equipped photobeam sensor. Like the activator, version 1.0 of the trigger doesn’t need to do just a gob of stuff, just send a signal to the controller when triggered. Everything after that is gravy.

There is one potential problem. The photobeam I *have* requires 12 volts and all the alternatives that work in the same basic way, with the emitter and detector in one device that points to a retroreflector on the other side of the detection area, also all start at 12 volts. This means that the trigger device will either have to have a different battery than the control box and activator, or I need to get 12 volts from somewhere.

There are boost voltage converters that use switching power supply technology to boost voltage. Of course, there is no free lunch, so a boost converter draws the same amount of power it converts. To supply 12V at 1A would take 1.6A from a 7.4V battery, plus a little to account for efficiency loss. Happily, the photobeam draws 40mA or less and, at this point, I’m not sure how much the D1 Mini Pro pulls, but I’d bet I can probably use one and a 7.4V battery and be just fine. I guess we will find out.
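The arithmetic behind those numbers is just power in equals power out divided by efficiency. Here is a small sketch in plain C++; the function name is made up, and the 85% efficiency used in the example below is an assumed round figure, not a measurement of any particular converter.

```cpp
// Input current (amps) that a boost converter draws from the battery,
// assuming a fixed efficiency between 0 and 1.
// Power in = power out / efficiency, so
// iIn = (vOut * iOut) / (vIn * efficiency).
double boostInputCurrent(double vOut, double iOut, double vIn, double efficiency) {
    return (vOut * iOut) / (vIn * efficiency);
}
```

With a perfect converter, `boostInputCurrent(12.0, 1.0, 7.4, 1.0)` comes to about 1.62 A, which matches the 1.6A figure above. For the 40mA photobeam at the assumed 85% efficiency, `boostInputCurrent(12.0, 0.040, 7.4, 0.85)` comes to roughly 76 mA from the 7.4V battery, before counting whatever the D1 Mini Pro itself draws.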

Longer term, the data protocol I develop should include the activator and trigger devices sending a periodic status packet. This will be used as a general keep alive communication, but the structure of the status message will include a device ID that the user assigns, a bit or two to identify what type of device it is and other status information, like battery condition and whether the activator needs to be reset. I have 250 bytes to play with, but one or two bytes should suffice.
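To show that two bytes really can carry all of that, here is one possible layout sketched in plain C++. Everything about it is an assumption for illustration, since the actual protocol is not designed yet: the first byte is the user assigned device ID, and the second byte holds two bits of device type, one bit for "needs reset" and five bits of coarse battery level (0 to 31). The type and function names are hypothetical.

```cpp
#include <cstdint>

// Two bits on the wire, so up to four device types.
enum class DeviceType : uint8_t { Trigger = 0, ControlBox = 1, Activator = 2 };

struct Status {
    uint8_t deviceId;      // user-assigned ID
    DeviceType type;       // what kind of device is reporting
    bool needsReset;       // activator has tripped and not been reset
    uint8_t batteryLevel;  // 0..31, coarse charge estimate
};

struct Packet {
    uint8_t bytes[2];
};

// Byte 0: device ID. Byte 1: [type:2][needsReset:1][battery:5].
Packet encode(const Status& s) {
    Packet p;
    p.bytes[0] = s.deviceId;
    p.bytes[1] = static_cast<uint8_t>(
        ((static_cast<uint8_t>(s.type) & 0x03) << 6) |
        (s.needsReset ? 0x20 : 0x00) |
        (s.batteryLevel & 0x1F));
    return p;
}

Status decode(const Packet& p) {
    Status s;
    s.deviceId = p.bytes[0];
    s.type = static_cast<DeviceType>(p.bytes[1] >> 6);
    s.needsReset = (p.bytes[1] & 0x20) != 0;
    s.batteryLevel = p.bytes[1] & 0x1F;
    return s;
}
```

As an example, activator number 7 that needs a reset and reports battery level 19 encodes to the bytes `0x07 0xB3`. There is plenty of headroom left in the 250 byte limit if a finer battery reading or more device types turn out to be needed.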

ESP Soon

Ok, *they* call it ESP-Now… or ESPNOW… or maybe it’s ESP-now…

Point is….

Espressif makes some chips now and then. Maybe a bunch of them. Chances are pretty good that if you have something in your house that does WiFi, especially if it is an IoT thing, it likely has an Espressif chip at the heart of it.

My gateway into ESP was the ESPHome platform in Home Assistant. I have a couple of ESP8266 based boards gathering and reporting information back to my Home Assistant. One is a pretty simple temperature sensor that reports the outside temperature, or at least the temperature *just* outside of the garage door. The other has two active inputs, a temperature sensor that tells the approximate ambient temperature around my water system, which consists of a bunch of overcomplicated plumbing in my garage, and the water flow rate and total consumption for the house, based on what a flow sensor equipped water meter is willing to share. This unit also has two inputs that I have not yet connected that are detailed in the other blog post.

To the point, I am at least somewhat familiar with the ESP8266 based Wemos D1 Mini board. This is a 1 x 1.5 inch board with the surprisingly powerful ESP8266 microcontroller on board. ESPHome hides a lot of the ugly details from you, but the ESP8266 is a general purpose microcontroller with built in WiFi support and a handful of general purpose IO pins. It is essentially a single core version of the ESP32 with a little less I/O and a (only) 80MHz clock.

Remember ESP-NOW? This is a post about ESP-NOW….

ESP-NOW is, to paraphrase the documentation somewhat, a hijack of the typical WiFi TCP/IP stack. ESP-NOW uses the hardware of the MCU’s WiFi facilities, but not the whole stack. It lives and communicates at layer 2 of the OSI model. It is a peer to peer protocol, with no need of a WiFi router, DHCP or any of that sort of thing.

This protocol leverages the ‘action frames’ element of the 802.11 standard. This subprotocol is generic, but often networking gear of any given brand uses this protocol to communicate between themselves. For example, action frames are how access points in the same SSID pass your roaming device between different access points, with vendor specific data passed as action frames.

This protocol comes with some limitations, such as a limit of 250 bytes per packet. For most such purposes, 250 bytes is way more than needed. I need to pass only one or two bytes as my payload.

If you want to skip a bunch of background and exposition, you can scroll down to the big Espressif logo.

I was killing the usual time on YouTube when, based I’m certain on browsing habits that include ESP8266 stuff, they presented a couple of ESP-NOW basic configuration guides. My application has been begging for a wireless connectivity method and here it is, dropped in my lap and almost perfect for my needs.

Without getting too detailed, I am building a system which needs a controller that can see an initiating action and trigger the activation of some hardware. Specifically, I am a competitive pistol shooter. The main sport I am involved with includes scenarios wherein you may have to address targets that are moving or may have non-threat targets moving in front of them. Historically, these moving targets have been triggered by simple mechanical means, such as stepping on something that will trigger a spring loaded device to yank a cord/cable in order to activate the moving target. At this point, I have a working and reliable system that operates electrically. It has been used at several matches for a little over 300 activations, with no mechanical or electrical failures. So long as it is reset between stages, it just works.

I have built a version 2.0 of the same device, with almost identical mechanics, but with four conductor connectors and cabling for reasons detailed below.

This unit has only been to one match, but except for the occasional failure of the humans to reset it, it performed perfectly.

The issue is, of course, everyone wants just a little more out of it, including me. I mentioned “So long as it is reset between stages…” and that is a fairly common issue. Failure of stage equipment to activate results in a mandatory reshoot for that competitor, which costs everybody time.

For example, a given competitor decides to be the one who resets the device. When that competitor is coming up in the rotation, generally for at least two competitors before him, he is not actively resetting the stage. If nobody notices at first, the activator doesn’t get reset.

When the next competitor goes, whatever is supposed to trigger the activator doesn’t seem to do anything, so that competitor is commanded to stop and reload to make a new attempt. Once this kind of thing is happening, it tends to snowball into two or three competitors in a row having some kind of problem and the root cause is that people didn’t notice that the activator was not reset.

Another, though lesser, issue is when resetting the stage and someone *has* reset the activator, but someone accidentally walks through the triggering area and prematurely sets off the moving target.

Let’s list our grievances…

- Nobody notices that the activator has not been reset.

- It is clumsy or impossible to guard certain kinds of triggers from activating while the stage is being scored and reset.

- I haven’t mentioned yet that everyone wants this to be wireless, too

- Ultimately, it would be great if the activator could reset itself.

I added the extra wiring to the activator with the intent to have a switch inside the unit that is closed whenever the unit has been tripped and thus turn on a big red light at the controller whenever it needs to be reset. While I haven’t actually figured out the best switch arrangement yet, the idea is otherwise fairly trivial to accomplish.

Triggering the activator wirelessly is something I have experimented with. The best analog solution I found for that is a wireless system intended to operate a gate from a remote button. The receiver is relatively small and can be powered by 12V. The remote is a typical handheld door opener button, but would be pretty easy to modify to attach to the control box as it currently operates. The bad news is that this wireless set is about the same cost as my build cost for an entire system, essentially doubling what I would need to charge for it in a finished product.

However, and I’m sure the reader is tired of all this exposition by this point, I do have a solution.

For reliability reasons, I did not first pursue microcontroller automation of this system. The more that can go wrong, the more you have to work to prevent it. I wanted the physical hardware to be solid, but I have always known that it could be more feature rich under MCU control. I have seen a couple of activators controlled by a Raspberry Pi. I love my RPi for all the things it is good at. In my opinion, they are extreme mass overkill for controlling small things, primarily because they are general purpose computers, running an operating system that is really designed for human interaction. In the match where a device was operated by a Pi, the stage it was on was almost thrown out due to reliability issues. The comparative simplicity of an MCU board removes a lot of potential issues arising from a more complicated controller.

On the other hand, I have seen quite a few stages, particularly at the national level, with activators controlled by actual industrial control hardware. They are designed specifically for control of industrial equipment where tolerance for failure is minimal. They are priced accordingly. When I started looking at making my device, a suitable industrial controller started at about $300, which is about how much I’d like my entire device to sell for.

My analog system is very reliable; it’s just switches and relays, with almost nothing to break, but adding or changing features requires literally rewiring it. An industrial controller would also be reliable and much more flexible, but is notoriously expensive. The MCU occupies the space between them.

I can replace a lot of relatively inflexible hardwired relays and switches with an MCU and thanks to Espressif’s ESP-NOW protocol, they can communicate wirelessly. It took several days of working through some details, but the decision had enabled several other decisions and almost all of them result in savings of time and cost.

For example, the activator itself is fairly simple mechanically. There is a car door lock motor that, when powered up by the current control box, simply pulls on a metal sear, releasing a spring loaded rod that supplies the yank required to activate almost every imaginable moving target. This lock motor has one troublesome characteristic, namely that leaving power applied to it will eventually damage the little motor inside of it. To mitigate this, I use a timer relay. The timer relay is powered by the same activation power from the control box; however, it is set to throw its contacts after about a half second and those contacts are wired to remove power from the lock motor to protect it. When power is removed, the relay resets. Ironically, this timer relay is the single most expensive part of the activator, including the steel box and frame, and for the MCU board I will be using, I could buy four of them for the cost of one timer relay.

The current control box is quite literally two relays, a switch and a battery. One relay is just to buffer the input trigger so that the full power going to the activator doesn’t have to flow through the triggering device, although most of the triggers in current use could probably handle it.

The other relay is to invert the trigger input. For example, the ‘dead man switch’ trigger is a hand held switch that the competitor holds and presses to ‘arm’ the system. When they release the button, it triggers the activator. In the sport rules, you are limited in what you can *require* a competitor to do once the timer has been started, but before the timer, you can require them to hold something, for example.

The switch is a three position switch to select whether the input is inverted or not, with the center position used to disable the system.
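Once an MCU takes over, that relay and switch logic collapses into a few lines. The sketch below is plain C++ and models only the steady state decision, not the half second pulse timing; the names `ModeSwitch` and `shouldActivate` are hypothetical, and it assumes `triggerClosed` is `true` while the trigger contact is closed.

```cpp
// The three positions of the control box switch.
enum class ModeSwitch { Normal, Disabled, Inverted };

// Decide whether the activator should fire for a given switch position
// and trigger contact state (true = contact closed).
bool shouldActivate(ModeSwitch mode, bool triggerClosed) {
    switch (mode) {
        case ModeSwitch::Normal:   return triggerClosed;   // fire on closure
        case ModeSwitch::Inverted: return !triggerClosed;  // fire on release (dead man switch)
        case ModeSwitch::Disabled: return false;           // center position: never fire
    }
    return false;  // unreachable with a valid enum value
}
```

In the inverted position, holding the dead man switch closed keeps the system quiet and letting go fires it, which is exactly what the second relay does today. Adding a new mode later would mean adding a case here rather than rewiring the box.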

The battery powers it all. I chose a 12 volt lithium ion battery pack that has a power switch and a barrel connector. In my original testing of the lock mechanism, I used a second timer relay configured in pulse generator mode, set to trip once every 30 seconds and wired as a trigger to the system. Once that was running, I left it on the workbench overnight. The next morning, about 6 hours later, it was still running. In 6 hours, the relay (both relays, technically) and lock motor had cycled about 720 times. The battery was between 40% and 60% charged. This is far and away long enough for a long day at a major match. During the entire time of this prototyping project, I have recharged that battery about 5 times and never was it completely flat when I started charging it.

The latest update to the control box just upgraded the connectors to 4 pin connectors and added power to the trigger input so that the control box could also power the photobeam trigger.

By changing these switches and relays out to a couple of MCUs, I will risk added complexity, particularly as I need to write the software for them, but there is a LOT to be gained. The big ones are wireless communications, easier addition of sophisticated features and overall lower build cost.

There are GOBS of companies making MCU boards and many of them are clones of each other. The specific controller I have chosen for the activator is commonly called the D1 Mini Pro. I have used the D1 Mini clone in my home automation pursuits. The primary difference between the standard and Pro variant is that the Pro offers an external antenna connector, a requirement for a device that needs to communicate wirelessly but still be enclosed in a steel box. For the immediate upgrade needs, the control box could also use this same board, but for the long term, the control box will probably have something with a display for eventual features I hope to add. I’m starting off with an Espressif ESP32-S3-Box because it has a display and a couple of buttons on the front of it and I already had it, purchased for some home automation pursuits.

This post is already too long, so I am going to cover my development, implementation and more detailed design philosophy in the next one.

Let It Slide

My older brother did a fair bit of high school math and science work assisted by a slide rule. I was too young at the time to understand the nuances of this remarkable tool. By the time I was in high school, the pocket calculator was the norm, if any calculation assistance was allowed at all. I recall my chemistry teacher encouraged us to use a calculator in his class because “this is hard enough without messing up the arithmetic”.

Between an undetermined number of calculators of various complexity and more recently, the entire internet full of specific purpose calculators, I never had cause to acquire or learn about the slide rule. I am at a point in my life (an age?) where such mechanical devices are fascinating.

A couple of years ago, I was watching something on YouTube and the subject of slide rules came up. Shown in that video was a slide rule configuration that I found quite compelling, the circular slide rule. Specifically, the Russian KL-1. Turns out they are not stupid expensive and a bit of eBay shopping revealed one from the early 70’s for a price I was willing to pay.

A slide rule is, at its most basic, a device inscribed with markings spaced according to a logarithmic scale. Logarithms are their own subject and there is much more to it than this, but the layman can think of logarithms as a numeric series where each next major number is a multiple of the previous, such as 1, 10, 100, 1000, etc., where each mark is 10 times the previous mark.

We use computers to calculate logarithms these days, but hundreds of years ago, math and science practitioners generated books full of logarithms so that they could be looked up in a table rather than calculated each time they were needed. John Napier, a Scottish polymath, is credited with introducing the use of logarithms to simplify other calculations. In 1614. So, not yesterday.

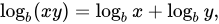

One of the most helpful properties of logarithms is that the logs of any two numbers added together will equal the log of those same two numbers multiplied together.

This has the effect of simplifying multiplication problems down to addition, though you need some way to know the logarithms.

For our purposes, b is 10, so we are working with base 10 logarithms.

The log of x plus the log of y equals the log of x times y.

For simplicity, let’s use 2 and 3 for x and y.

Log(2) + Log(3) = Log(6)

Now, Log(2) = 0.30102999 and Log(3) = 0.47712125. Added together, those come to 0.77815125. Since we are using base 10, 10 to the power of 0.77815125 turns out to be….. 6!
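If you would rather let a computer check that arithmetic, here is a minimal Python sketch. It only uses the standard `math` module, and the variable names are mine, just for illustration.

```python
import math

x, y = 2, 3

log_x = math.log10(x)      # about 0.30103
log_y = math.log10(y)      # about 0.47712
log_sum = log_x + log_y    # about 0.77815

# Raising 10 to the summed logarithm recovers the product of x and y.
product = 10 ** log_sum

print(f"log10({x}) + log10({y}) = {log_sum:.8f}")
print(f"10 ** {log_sum:.8f} = {product:.6f}")
```

Floating point math means the result may be off from 6 by a vanishingly small amount, which is still far better than I can read off a scale.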

The scales on our slide rule give us an easy way to add those two scary small numbers because someone else marked the scale in logarithmic intervals, forming a lookup table of sorts.

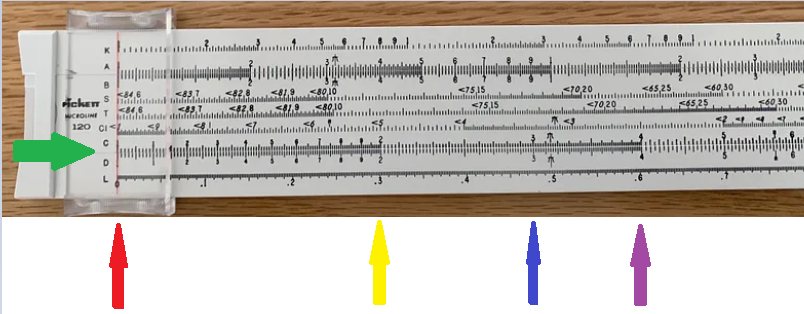

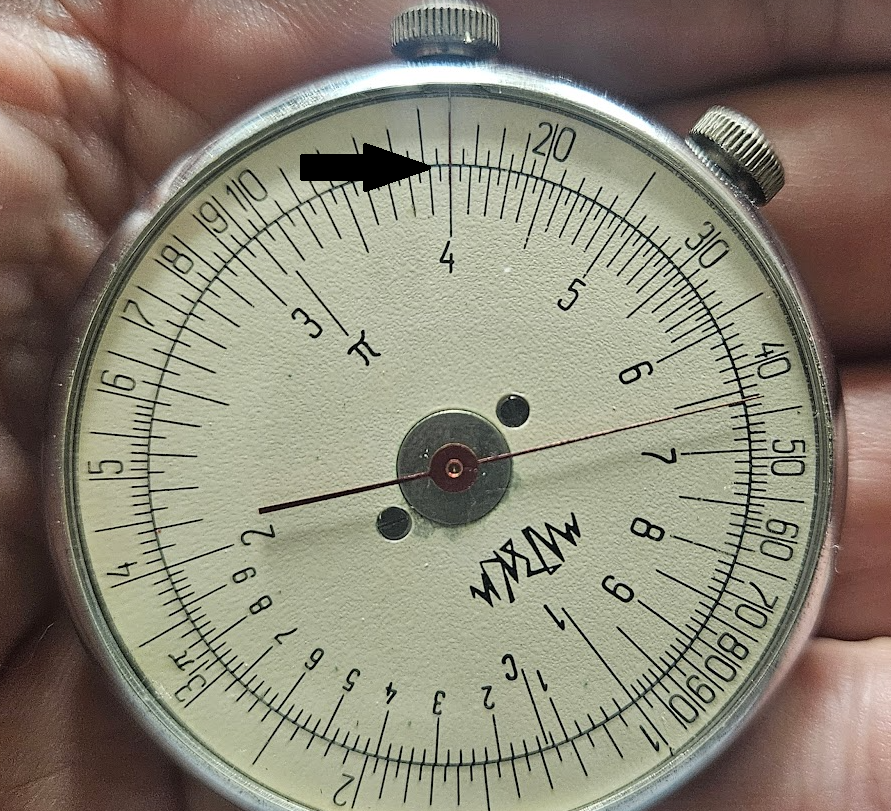

For the moment, let’s focus on the C and D scales, shown by the green arrow. These are identical log base 10 scales. Logarithms for numbers less than 1 are negative numbers, so the scale starts at 1, shown by the red arrow. 2 is shown by the yellow arrow, 3 by blue and 4 by purple.

Next, let’s say that our scale is some arbitrary number of units long. It doesn’t matter what units unless you are actually making a slide rule, which I want to do now. 🙂 As luck would have it, the slide rule in this picture has the L scale, which shows the actual numeric value of the log shown in scales C and D. The L scale is linear, beginning at 0 and proceeding evenly to the right. For our purposes, we can consider the L scale to be a ruler.

Log 2 is 0.30102999. The distance from the red arrow to the yellow arrow on the L scale is 0.3 or so.

Log 3 is 0.47712125. The distance from the red arrow to the blue arrow is 0.47 or so.

Log 4 is 0.60205999. The distance from the red arrow to the purple arrow is 0.6 or so.

By itself, that seems maybe obvious. But what this means is that we can represent the logs of our various numbers by the physical distance they are from 1.
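Since I mentioned wanting to make one, here is a rough sketch of how the mark positions could be laid out. The 250 mm scale length and the function name `mark_position` are my own assumptions for illustration. The only real rule is that each mark sits at the scale length times the base 10 log of its value.

```python
import math

SCALE_LENGTH_MM = 250.0  # hypothetical scale length; any unit works

def mark_position(value, length=SCALE_LENGTH_MM):
    """Distance from the 1 mark to `value` on a single-decade log scale."""
    if not 1 <= value <= 10:
        raise ValueError("a single-decade C/D scale only covers 1 through 10")
    return length * math.log10(value)

# Positions of the whole-number marks, measured from the 1 mark.
positions = {n: mark_position(n) for n in range(1, 11)}
for n, pos in positions.items():
    print(f"{n:>2}: {pos:7.2f} mm")
```

Note that the distance to 2 plus the distance to 3 lands on the 6 mark, which is the whole trick.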

2 x 3 = 6

log(2) + log (3) = ?

0.30102999 + 0.47712125 = 0.77815125

Find 0.77815125 on the L scale (no arrow shown) and it is [drumroll] …. 6! 6ish, anyway, because we are really only able to see 0.775ish on the scale.

This reveals what people accustomed to the instant precision of electronic calculators may find challenging about slide rules. In practiced hands, they are very fast, but not super precise. However, they give an answer that is almost always going to be close enough for most purposes. If someone needs to figure out how many degrees 1/7th of a circle is so they can cut a wooden circle into 7 pieces, 360 / 7 = a hair less than 51.5 is close enough for a saw.

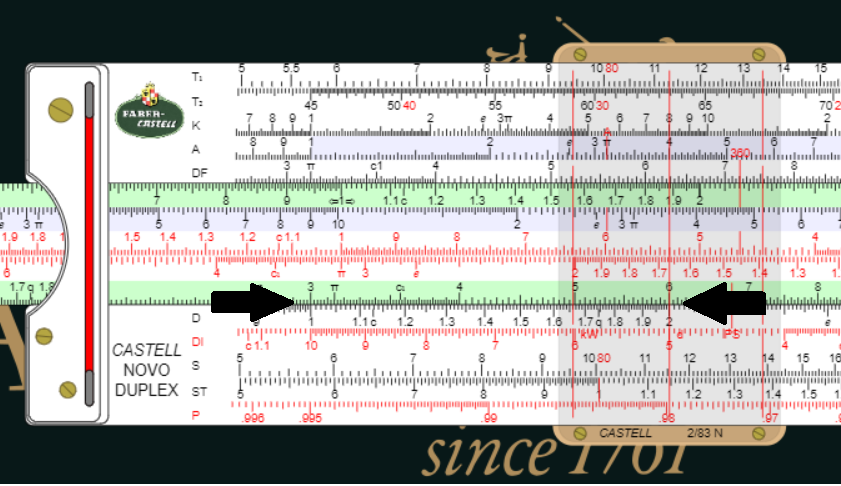

Multiplication on the slide rule shown is accomplished by sliding the inner movable scale until the multiplier on the C scale lines up with the 1 on the D scale, then moving the cursor (the clear slide with the line across it) to the multiplicand on the D scale, then reading the result on the C scale.

I don’t have a classic slide rule, but I found a wonderful site with slide rule simulations.

The arrow on the left shows the multiplier 3 lined up with 1 on the D scale. The arrow on the right shows the cursor lined up with the multiplicand 2 on the D scale and the answer 6 revealed on the C scale.

Larger multidigit numbers can be handled by keeping track of the powers of 10 in your head. 30 x 20 = 600 for example. Using 3.0 (times 10 in your head) and 2.0 (times 10 in your head) = 6.0 (times 100 in your head; 10 x 10). If you need large numbers with high precision, just use paper to do long form multiplication and use the slide rule to do the arithmetic in each step.

33 x 21 = 690-something-between-0-and-5. It’s 693 precisely.
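Here is a small Python sketch of that whole procedure: strip the powers of 10, add the log distances, then read the answer to about three digits. The function names and the three-digit reading limit are my own assumptions, meant to mimic squinting at a scale rather than to describe any particular slide rule.

```python
import math

def split_decade(value):
    """Split a positive number into (mantissa in [1, 10), power of ten)."""
    exponent = math.floor(math.log10(value))
    return value / 10 ** exponent, exponent

def slide_rule_multiply(a, b, digits=3):
    """Multiply by adding scale distances, tracking powers of ten separately."""
    mant_a, exp_a = split_decade(a)
    mant_b, exp_b = split_decade(b)

    # Distance along the scale, as a fraction of its full length.
    distance = math.log10(mant_a) + math.log10(mant_b)
    exponent = exp_a + exp_b

    # Running off the end of the scale means wrapping around and
    # carrying one more power of ten, done "in your head".
    if distance >= 1.0:
        distance -= 1.0
        exponent += 1

    # Only a few digits can really be read off the scale.
    reading = round(10 ** distance, digits - 1)
    return reading * 10 ** exponent

print(slide_rule_multiply(33, 21))   # roughly 693
print(slide_rule_multiply(30, 20))   # roughly 600
```

With three digits of reading, 33 x 21 happens to come out right. Bigger numbers would lose their trailing digits, same as on the real thing.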

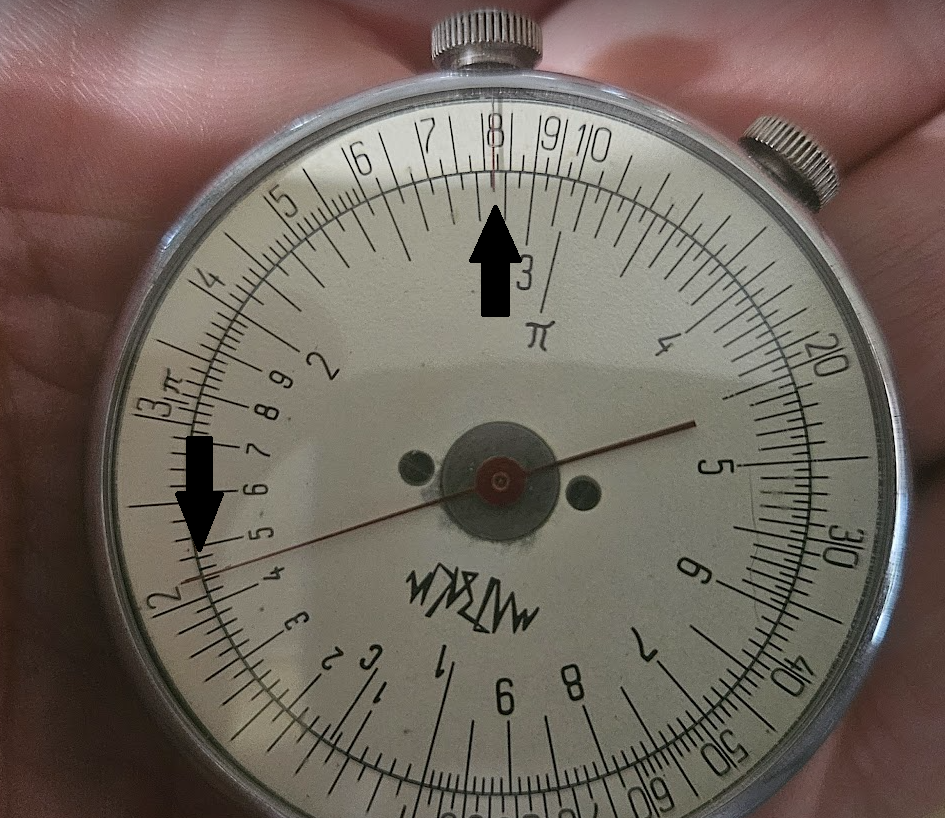

Nothing says that the scales need to be engraved on something straight. Enter the KL-1.

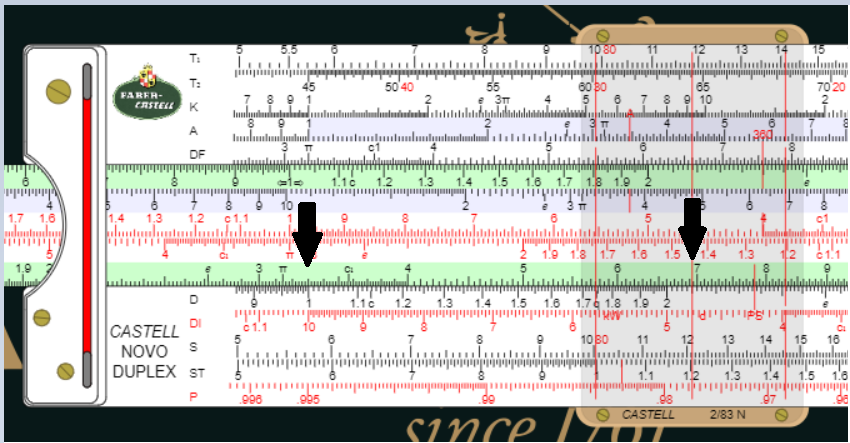

There are many other circular slide rules and even other models that emulate a pocket watch like this one does. In this case, this side has what is essentially the C/D scale printed on a movable face and two cursors. One cursor is fixed, here aligned with the 1 and the knob which turns the face. The other cursor is the red hand, which is moved with the other knob.

The procedure is slightly different, but still works under the exact same principles. The outer scale is essentially A scale in typical slide rule parlance. Turn the face until the multiplier aligns with the fixed cursor, 3.9 or 39 in this case.

Note that the inner scale is labeled C and the written instructions say it is to be used for basic calculation. If I use the inner scale, the multiplication math still works, but the outer scale has more divisions and is thus more precise. The inner scale becomes more important when used in division and square root calculations, covered a little later. The A and C scales correlate. The A scale numbers are the squares of the C scale numbers. I have NO understanding how this helps with division. I have much to learn.

The hand is rotated to 1 on the scale. These two steps are equivalent to sliding the scale to line up the multiplier on the C scale with 1 on the D scale.

Then the scale is rotated until the multiplicand, 2.1 or 21 here, is aligned with the red hand. The result is read at the fixed cursor, a hair less than 8.25 or 825. The precise answer is 8.19 or 819.
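The same principle on a dial can be sketched in Python by swapping distance for angle. This assumes an idealized scale where one decade (1 to 10) wraps exactly once around the face, which may not match how the KL-1 scales are actually laid out. The function names are mine.

```python
import math

def dial_angle(value):
    """Degrees from the 1 mark to `value` (1 <= value < 10) on a one-turn log scale."""
    return 360.0 * math.log10(value)

def value_at(angle):
    """Inverse of dial_angle: the scale value found at a given angle."""
    return 10 ** ((angle % 360.0) / 360.0)

# 3.9 x 2.1: add the two rotations, then read the value at the total angle.
total = dial_angle(3.9) + dial_angle(2.1)
mantissa = value_at(total)
print(f"{total:.1f} degrees of rotation -> {mantissa:.2f}")
```

A nice side effect of the circle is that going past 10 just keeps going around, so there is no running off the end of the scale. The power of 10 still has to be tracked in your head.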

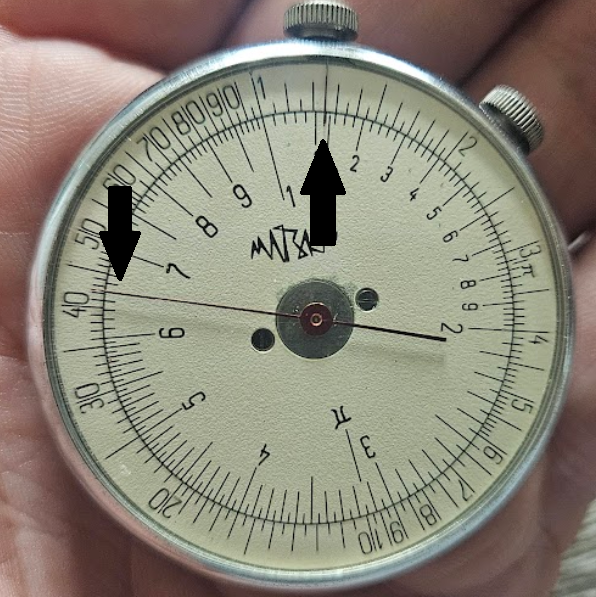

The other side of the KL-1. Interestingly, written instructions call this side 1. It has three scales and another red hand cursor. The scales don’t move, but the hand does. The outer scale is the inverse of the inner C scale on side 2 and is called the DI scale. The other two are the S and T scales, used for sine and tangent trigonometry functions that I don’t yet know how to use. Note that the T scale is a 630 degree spiral. I have much to learn.

Division is similar in that Log (x) minus Log (y) = Log (x/y). The procedure for division is similar to multiplication.

Rotate the outer scale to put the dividend under the fixed cursor, 16 in this example.

Rotate the red cursor to the divisor on the inner scale, 2 in this case.

Rotate the inner scale to put the dividend, 16, under the red cursor. The quotient result, 8, is read on the inner scale under the fixed cursor.

125 / 65 = ?

Put 125 in the outer scale under the fixed cursor and 65 in the inner scale under the red cursor.

Rotate the scale to put the outer scale 125 under the red cursor and read the quotient from the inner scale under the fixed cursor. 125 / 65 = 1.92. The precise answer is 1.92307692, but I think 1.92 is definitely close enough.
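Division can be sketched the same way as multiplication, just subtracting the log distances instead of adding them. This is a minimal illustration with function names of my own choosing, not a model of the KL-1 mechanics.

```python
import math

def split_decade(value):
    """Split a positive number into (mantissa in [1, 10), power of ten)."""
    exponent = math.floor(math.log10(value))
    return value / 10 ** exponent, exponent

def slide_rule_divide(dividend, divisor):
    """Divide by subtracting scale distances, tracking powers of ten separately."""
    mant_x, exp_x = split_decade(dividend)
    mant_y, exp_y = split_decade(divisor)

    distance = math.log10(mant_x) - math.log10(mant_y)
    exponent = exp_x - exp_y

    # A negative distance means wrapping backwards past the 1 mark,
    # which costs one power of ten.
    if distance < 0.0:
        distance += 1.0
        exponent -= 1

    return 10 ** distance * 10 ** exponent

print(f"125 / 65 = {slide_rule_divide(125, 65):.2f}")
print(f"16 / 2 = {slide_rule_divide(16, 2):.2f}")
```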

The markings on the inner scale show greater precision (0.12 per division) for values between 1 and 2, slightly less from 2 to 6 (0.25 per division) and even less from 6 to 10 (0.5 per division). This might be due to the range of expected results. The closer to 1, the more precision needed. However, I think it is really a horological artifact. The divisions are physically similar in size, but the logarithmic intervals are progressively smaller as the values go up. The lines are all about the same distance apart in degrees of arc, so there is simply room for more divisions between 1 and 2 than there is between 6 and 10.
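To put rough numbers on that, this Python sketch computes how many degrees of arc a fixed step of 0.1 takes up at different places on the dial. It assumes an idealized one-decade-per-turn scale, and the 0.1 step is just an illustrative value, not the KL-1's actual division size.

```python
import math

def arc_degrees(start, step):
    """Degrees of arc between `start` and `start + step` on a one-turn log scale."""
    return 360.0 * math.log10((start + step) / start)

# The same numeric step shrinks in arc as the values climb toward 10.
for start in (1, 2, 6, 9):
    print(f"{start} to {start + 0.1:.1f}: {arc_degrees(start, 0.1):5.2f} degrees")
```

The step near 1 comes out several times wider than the same step near 9, so finer divisions near 1 fit comfortably while the same divisions near 10 would be too crowded to print or read.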

The Trouble With Island Life

When last we left our heroes, Home Assistant was running via SD card on a Raspberry Pi 4 B with 4GB RAM. Why was that not OK?

Well, the biggest thing is that SD cards have a finite life and, as the primary hard drive for a continuously running system, the card is subject to significant writing action over time. The operating system does stuff, Home Assistant does stuff, both of them log activities, etc. Some SD cards are optimized for longer write life, but in short, SD cards can actually wear out.

The SD card I used to install Home Assistant on the Raspberry Pi was not new, but probably has plenty of life left. Still, I’d rather use a drive intended to be a hard drive.

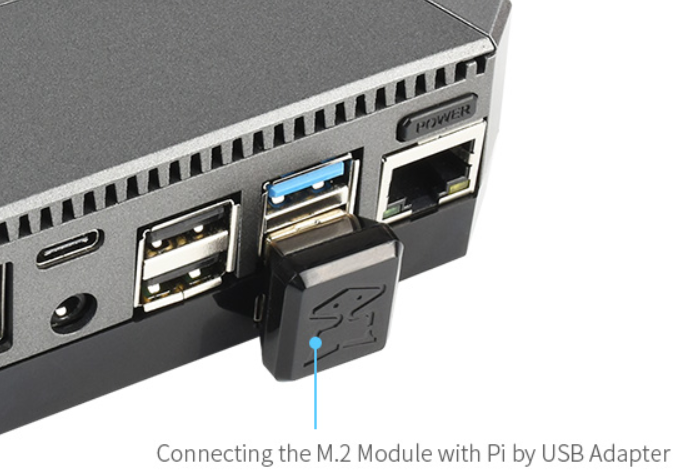

Running a Raspberry Pi on an SSD drive is pretty simple, though with the Pi 4, we are limited to USB adapters. I didn’t want to have such a critical component hanging on the end of a plug in cord, so I was happy to see a recommendation for the Argon One case for the Pi4. Specifically, I ordered the case equipped with an M.2 SATA adapter, along with a relatively unknown brand 64GB M.2 SSD.

The Argon One case is pretty nice in several ways, but the cool part here is that the basic case holds the Pi 4 in the top of the case and the bottom cover is interchangeable for a plain bottom or a bottom equipped with an M.2 SATA adapter. It uses a clever U shaped plug to connect the adapter to the bottom USB 3.0 jack on the board.

The Argon One case adapts the micro HDMI on the Pi4 to standard HDMI, if that matters to ya. In my application, I don’t need it, but if I use a Pi for something else, that will no doubt be handy. It also adds an actual power switch and still gives access to all the I/O pins.

Since I was upgrading anyway, I checked stock at Adafruit and found that they had 8GB Raspberry Pi 4 boards in stock, so I got one of those on the way as well. May as well do all the upgrading at once and be done with it for a while.

The process for setting up a Pi4 to boot on SSD is pretty straightforward. First, I used my USB to M.2 adapter to put a Home Assistant image on the 64GB SSD, the image provided by Raspberry Pi Imager. Then in the utilities section, there is an image to put onto an SD card that sets the boot order in the Pi to try SSD first, which I burned to a handy SD card I had laying around. I booted on that SD according to the directions, then booted on the SSD in my USB adapter and it came up. I did not do all the setup as I wanted to wrap everything up in “permanent” fashion in the new case first.

It was at that point that I discovered that my 64GB SSD would not quite fit the socket in the case adapter. Much trial and error and even a little forcing revealed that, although it was not mentioned as so on the ordering description, the 64GB SSD was apparently an NVMe SSD and the case adapter specifically is not NVMe compatible. Not only was the non-NVMe drive a little cheaper, all indications were that NVMe speeds were wasted on Raspberry Pi hardware. None of that would help me right then.

I also found that M.2 SSDs that are verifiably not NVMe are really hard to find. Apparently nobody is looking for slower stuff anymore. If I found one at all, shipping times were long. I decided that the best bet was to look back to Argon One and get their upgraded NVMe base.

It took a couple of days to arrive and a few more to reach a point when I could dedicate some time to work on it. Everything looked ready to go, but the 64GB SSD would not boot up. As part of verifying it, I put it back in the USB adapter and put it on my PC. The PC would show the adapter plugged in, but as a drive with 0 bytes. Long story short, after several attempts at recovery, I concluded that somewhere in the previous attempts to use it, perhaps in the steps involving force, I must have damaged it. I gave up, drove to WalMart and got a Western Digital 500GB NVMe for less than $50. I think it may have been $34, but I don’t remember for sure.

Back in the late 1990’s, I was in the San Francisco area for work. We went to the local Fry’s electronics and found they had a fantastic deal on 1GB SCSI hard drives for about $300. It was still a lot of money, but imagine what I could do with a GIGABYTE of hard disk space?!?!? Try to find something that small now. Not even at WalMart.

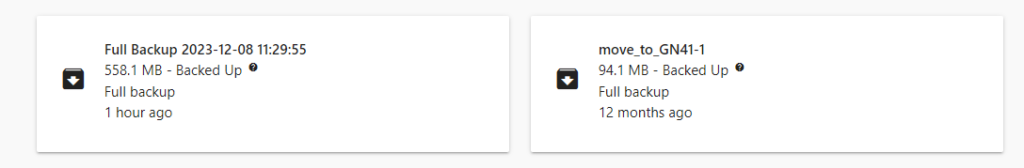

Anyway, skip back to the part where I put the images on the SSD and from there forward, everything worked perfectly, including backing up the “old” 4GB system and restoring it on to the new system. My Home Assistant is now running on a Raspberry Pi 4 with 8GB RAM and a 500GB SSD with 458GB free space.

The new case provided two big improvements. First, while I liked the heat sink wrap ‘case’ I had it in before, the Argon One case does look better, especially to my primary customer. Second, the fans on the heatsink case were iffy on day one and one of them had already just quit, so it ran hotter than it needed to. The system is inside a closed cabinet with a 16 port PoE switch and two NAS units, so it’s a little warm in there already.

The big improvement, however, is speed. Particularly if a process involves reloading or rebooting, the SSD is significantly faster than the SD card. Updates take far less time than they used to, which is important since there are so many of them, especially at the beginning of every month 🙂

If I were to pick one disadvantage to this new setup versus running Home Assistant as a virtual machine on the NAS, it’s that the VM method gave me the snapshot tool. With it, I could completely hose the system and have a snapshot to restore from, either the automatic weekly snapshot or if I thought I was going to break something, a specific ‘before’ snapshot. There isn’t really a substitute for that particular freedom with the current setup.

Moving Off The Farm

No, not me. Home Assistant, moving off the server farm. The tiny tiny server farm.