Since about 2012 or so, I have been working on some home automation, mostly using Vera (which has changed names again, kinda) and a handful of Z-Wave enabled switches. It has never really taken off like it could have at our house, but I do have a few bits in place that we have grown to appreciate, especially once I deployed a few Amazon Echo devices and we could ask Alexa to turn some stuff on and off for us.

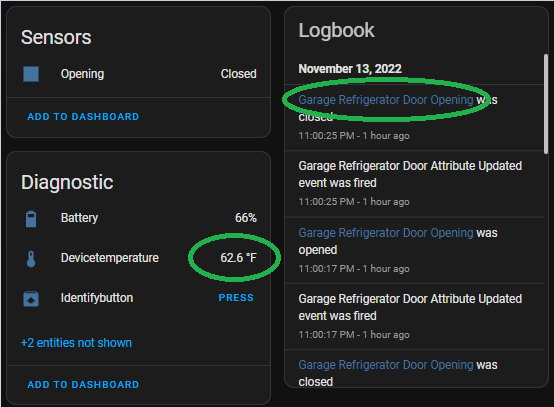

The three things that see use multiple times every day are plug modules that run lights and a ceiling fan in our back yard, a smart bulb in a bedroom lamp and the thermostat on the house HVAC system. Less often, but still seeing occasional use, are two wall switches running a driveway light and front porch light.

I do have a smart plug strip that sometimes sees seasonal use for Christmas decorations and a generic plug module that right now is running the pump for an above ground pool, but I’ve used it for other short term uses as well.

As explained in a very old post, the DSL internet we had when we moved out here was not reliable enough for a service that was 100% dependent on cloud connectivity. MiCasaVerde’s “VeraLite” product had cloud connectivity to allow one to control it from elsewhere, but the actual control is local – not dependent on internet connectivity to work at all – so it seemed a good choice.

During the decade since then, I would occasionally add a device, but the biggest limitation was usually a less than perfectly reliable Z-Wave network that needed occasional rebuilding, at least until I installed the version of Vera controller I have in place currently.

Z-Wave also had some difficulties with the size of our house. Ironically, if I had replaced every switch in the house with Z-Wave switches, the mesh networking protocol probably would have been much more robust, but with two wall switches side by side and one thermostat 30 feet from them, there probably just aren’t enough nodes for the redundancy.

Once we had linked Alexa and had grown to “depend” on controlling the thermostat verbally, having to reconnect it to the network every month or two became tedious. The then-new replacement Vera controller had Z-Wave and Zigbee and more memory, so I upgraded it and got a new thermostat that connected via Zigbee. It looked nicer and was much more reliable, needing to be reconnected to the network only two or three times a year. After all, the thermostat was the only Zigbee device in the house, so there was a mesh network consisting of exactly two devices.

One time when I had great difficulty getting the thermostat to reconnect, I reached the conclusion that either the radio in it or the one in Vera had gone bad. Since those were the only two Zigbee devices in the house, I elected to change the thermostat again, this time going to a WiFi device. Unfortunately, and as is almost inescapable anymore, it is a cloud dependent device, at least as far as remote control of it goes. Sigh…

Now that the thermostat is WiFi, the only Z-Wave devices left are the two wall switches that run the porch light and driveway light. Those are thus also the only remaining need for Vera. Everything else can be, and largely is, controlled by some other means.

Not locally, I might add.

I was at a crossroads.

Do I keep adding piecemeal to the mixed tech automation I have now? Alexa *kinda* ties it together, but only because Alexa has various skills that talk to Smart Things (my WiFi switches) and Geeni (smart bulbs) and Honeywell Home (thermostat) and Vera (Z-Wave switches). They all work slightly differently and none have any real automation other than basically on or off or maybe some simple scheduling.

Do I drop Vera and replace those only two switches it still directly controls and go with all WiFi/cloud automation or do I really do the hard think and get completely away from the cloud, which would probably mean changing my thermostat *again* and implementing a local controller?

Sometimes, it takes a while for me to write a blog post; day job an’ all. When I wrote those last two paragraphs a couple of weeks ago, I must admit that I was already elbows deep in replacing Vera with Home Assistant. Vera is already unplugged. However, at that time, I was still planning to move Z-Wave over and even expand Z-Wave devices in the house. For one thing, I still have a box full of undeployed Z-Wave devices that I purchased over the years. Also, Z-Wave power switching devices seem to be more likely to include power monitoring features. Not exclusively, but Zigbee devices just seem to include power monitoring way less often.

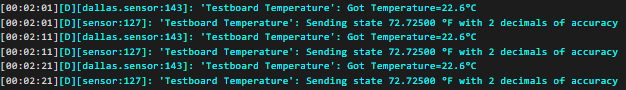

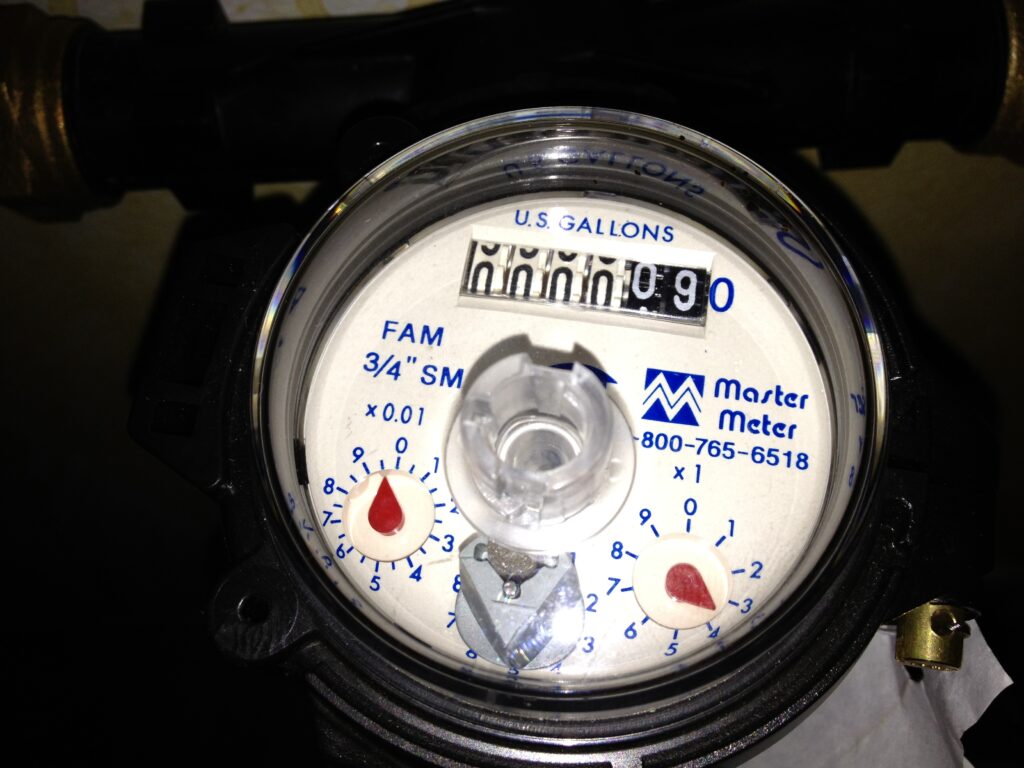

Power monitoring for the sake of power monitoring is not my goal for most of these devices, but for two or three of my applications, I use power monitoring to verify that the device is indeed operating. I use two heatlamps to mitigate freezing of some water system components and a tank heater and recirculating pump for the horses’ water trough. If they are switched on, but *not* drawing power, they need some kind of attention. The Z-Wave smart plug devices I have used in the past have power monitoring, but Vera didn’t really give me any way to leverage that into some kind of action item if it were to suddenly go away.
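To make that idea concrete, here is a minimal Python sketch of the “switched on but not drawing power” check. It is only the bare logic, not how Home Assistant or Vera implements anything. The `PlugReading` type, the plug names, the wattage figures and the 5 W threshold are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class PlugReading:
    """One snapshot of a smart plug (hypothetical structure)."""
    name: str
    switched_on: bool
    watts: float


def needs_attention(reading, min_watts=5.0):
    """True when a plug is switched on but drawing less than min_watts.

    min_watts is an assumed threshold; a real heater or pump would need
    a value chosen from its actual idle and running draw.
    """
    return reading.switched_on and reading.watts < min_watts


# Made-up sample readings, not measurements from any real device.
readings = [
    PlugReading("wellhouse heat lamp", True, 248.0),
    PlugReading("trough tank heater", True, 0.4),
    PlugReading("recirculating pump", False, 0.0),
]

alerts = [r.name for r in readings if needs_attention(r)]
print(alerts)
```

A plug that is simply switched off is not flagged; only the combination of “on” and “no load” counts as a problem.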

I’ll stop here to describe my Home Assistant path thus far. I was made aware of it by a friend while we shared a ride to and from Colorado for a firearm competition event. The prospect of regaining local control is what really sold me on it, but between the demise of MiCasaVerde (the original company controlling the Vera line of controllers) and the new Ezlo company that has largely taken over that space with what may be limited support of the older hardware and software, I prefer to go in the open source direction. Yeah, I won’t have a customer service rep to yell at, but with most big well supported open source projects, you don’t need to. Somebody somewhere has probably already had your problem and fixed it. You just need to be good at Google to find it.

Home Assistant is usually deployed on a Raspberry Pi, but it is certainly not limited to that particular hardware. At the time I got interested, Raspberry Pi 4 boards were in high demand and rarely in stock anywhere for more than a few hours. The only Pi boards I have on hand are probably Pi 2 at best, which is not enough Pi for Home Assistant. While other hardware options might work as well or maybe even better, I chose first to try using a Docker container hosted on my Synology DS220+ NAS since it is not worked very hard and has plenty of leftover CPU power.

It came up quickly. Almost immediately, I wanted to add HACS, the Home Assistant Community Store, for an add-on to interface with my Emporia Vue account (yet another cloud based service… sigh). Installing HACS in the container required being able to run wget from the console, which was not installed in that image, which itself took a bit to get to, so I picked up a couple of (soon to be forgotten again, I’m sure) skills there.

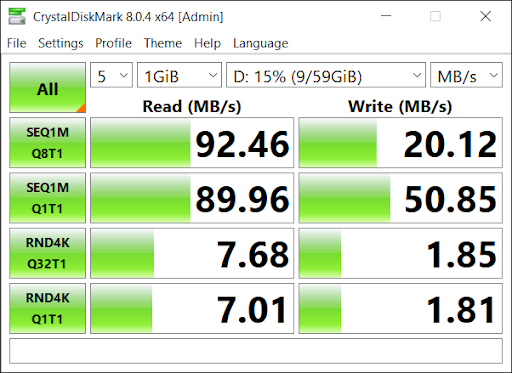

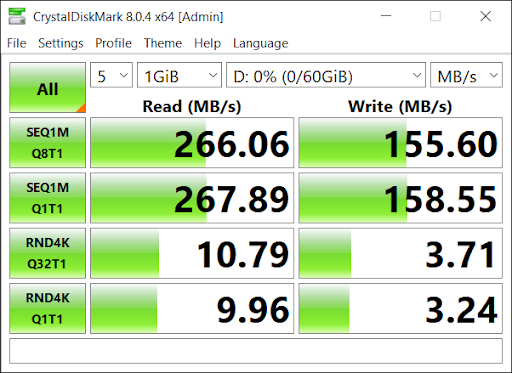

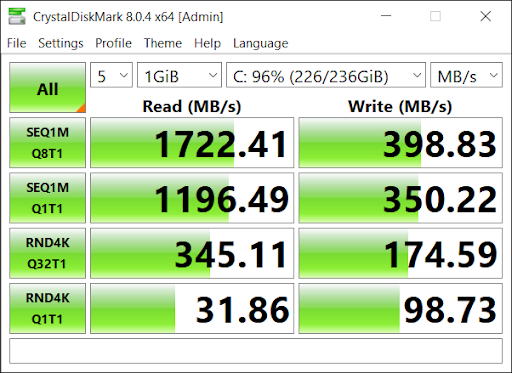

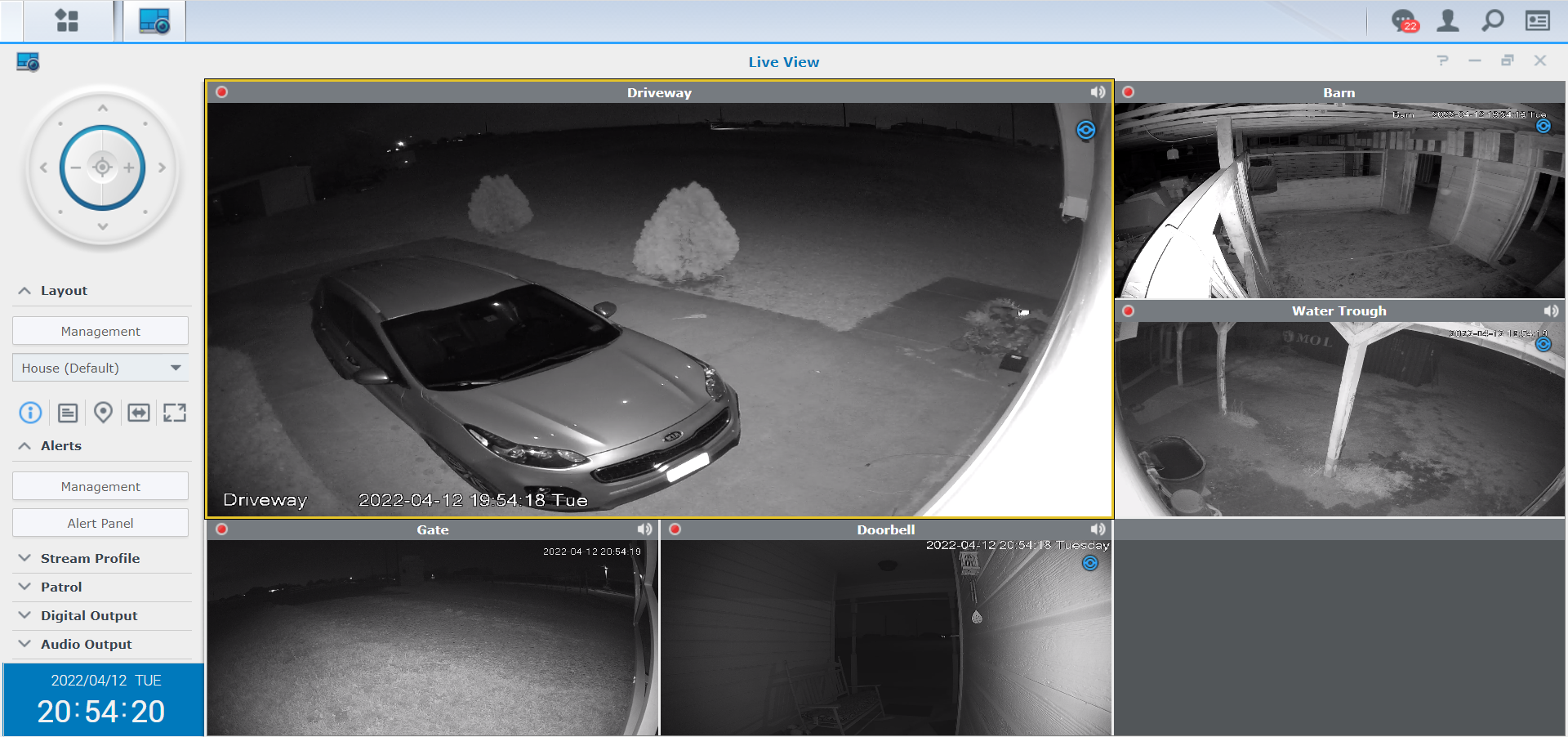

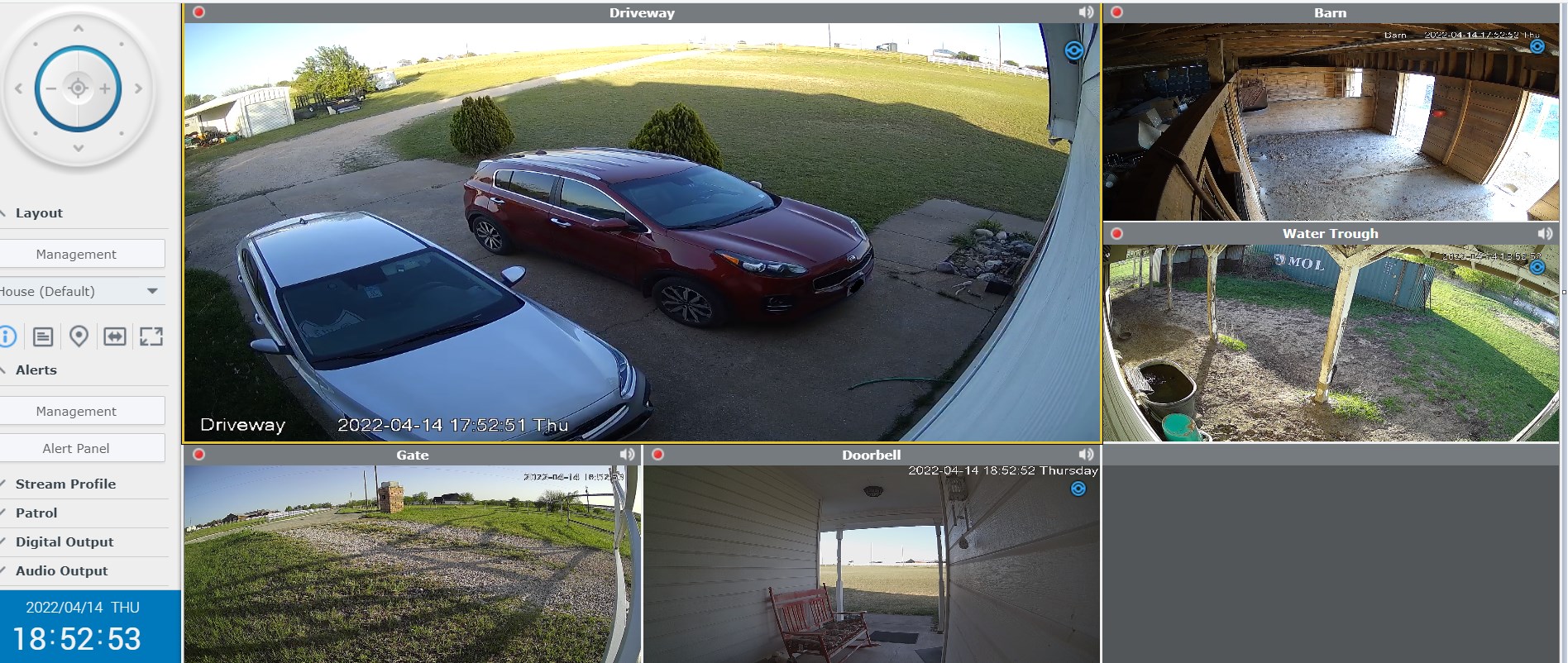

I ran up against other container version limitations pretty quickly, particularly when it came to adding drivers for anything I might need to plug in, like Z-Wave or Zigbee bridges, so after about 2 days, I elected to upgrade to a VM version, still hosted on the NAS. This is with it recording five cameras and running the VM:

This has worked out much better. It’s running Alpine Linux, a lightweight distro. You would almost never need to even know that unless you royally screw something up. Don’t ask me how I know.

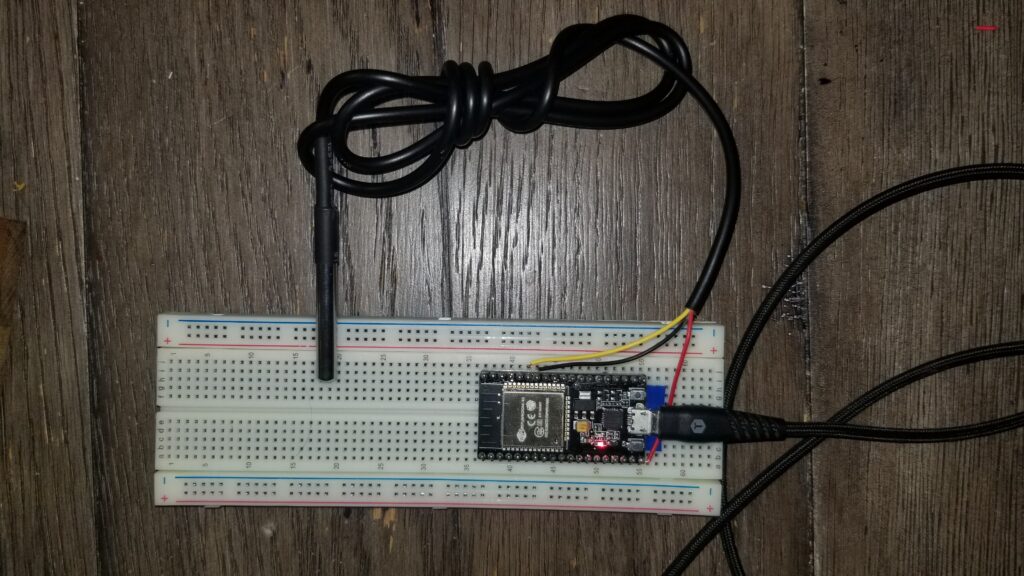

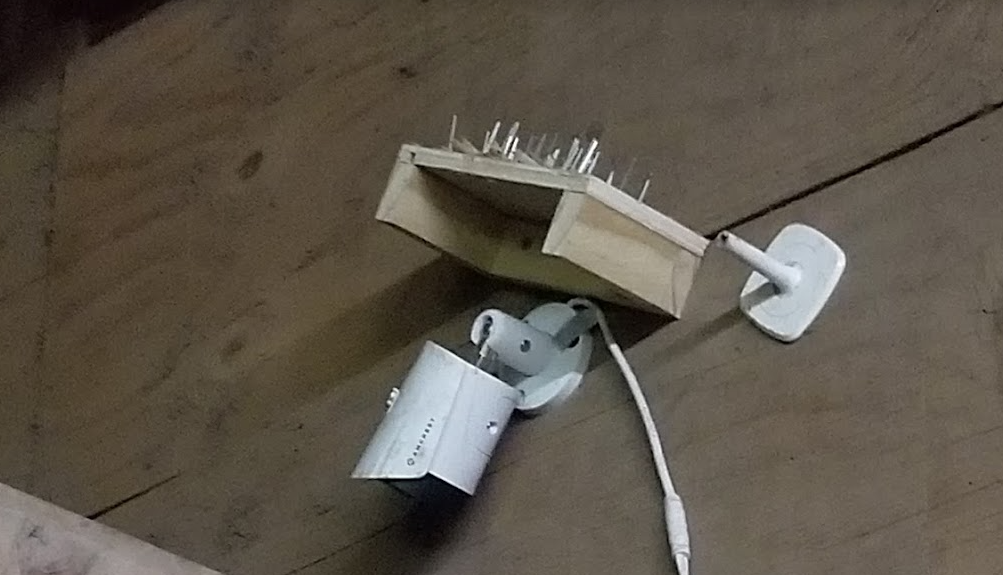

I acquired a Sonoff Zigbee controller and an Aeotec Z-Stick 7, both from general recommendations gathered from various Home Assistant YouTube videos and general Googlage. These are connected to the Synology NAS via a USB 3.0 hub and they are powered by the NAS.

There was one concern with the Aeotec dongle regarding a required firmware update. The process for that is convoluted, but not particularly difficult. Just a lot of steps. Basically, you download a set of tools and a firmware image file from the chipset manufacturer, Silicon Labs. This requires, of course, a free developer account with Silicon Labs. Once the pieces are in place, it’s a 3 minute job. Curiously, once done, if you try to do it again, it gives you a scary error and aborts, but apparently this just means it has already been done. It is, after all, a tool for developers, not end users. There are tools there that would also help me later verify that I did not indeed fry my Z-Stick. I’ll get to it…. 🙂

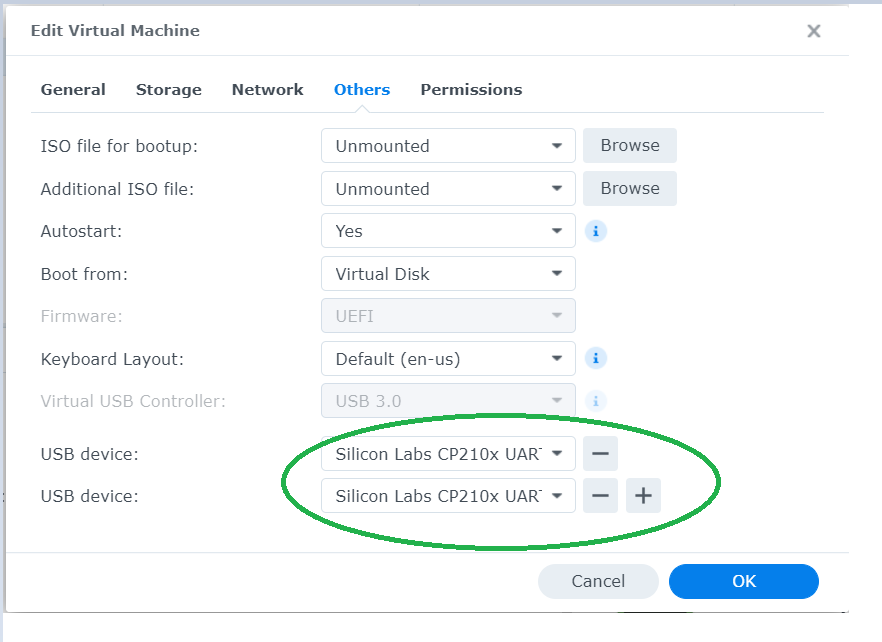

Hardware-wise, the VM passes USB devices through to the guest OS when configured to do so.

As luck would have it, both Sonoff and Aeotec use the same family of Silicon Labs USB UART chips, which would eventually cause some confusion, but let’s avoid that story as long as possible. We will continue to pretend that I was never an idiot and that Zigbee came up on ttyUSB0 and Z-Wave on ttyUSB1 and that all the stuff worked fine!
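For what it is worth, Linux systems running udev usually expose USB serial adapters under `/dev/serial/by-id`, with names built from the adapter’s identity rather than the order it was plugged in. The Python sketch below just lists those names and the device node each one currently points at. The function name `stable_serial_paths` is made up for illustration, and whether that directory exists on any given Home Assistant install is an assumption.

```python
import os


def stable_serial_paths(by_id_dir="/dev/serial/by-id"):
    """Map each by-id name to the device node it currently resolves to.

    Returns an empty dict when the directory does not exist, for example
    on a machine with no USB serial adapters attached.
    """
    if not os.path.isdir(by_id_dir):
        return {}
    mapping = {}
    for name in sorted(os.listdir(by_id_dir)):
        link = os.path.join(by_id_dir, name)
        # Each entry is a symlink; realpath follows it to e.g. /dev/ttyUSB0.
        mapping[name] = os.path.realpath(link)
    return mapping


for name, node in stable_serial_paths().items():
    print(f"{name} -> {node}")
```

Two adapters built on the same UART chip family can still swap `ttyUSB` numbers between reboots, so pointing an integration at the by-id name rather than the number is one way to keep them straight.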

Actually, Zigbee never gave me an ounce of trouble. Well, maybe an ounce because of the geography of our place and where I want devices.

Some of the things I want to control and automate are in our barn/workshop, which is, at minimum, about 80 feet from the house. Realistically, the path from the Zigbee controller to the light switch in the workshop is more like 125 feet.

Zigbee allows exactly one controller in the network. Everything else is an endpoint or a router/endpoint. This means that to reach to my workshop, which incidentally has awesome ethernet connectivity, I must find a way to add Zigbee devices to bridge an RF route out there, completely ignoring that existing robust and reliable communication path. Remember how I mentioned that with open source, someone has usually already solved your problem? Apparently not this problem.

On the other hand, Zigbee, being a mesh network protocol, does work pretty well if you throw enough devices at it and place them cleverly.

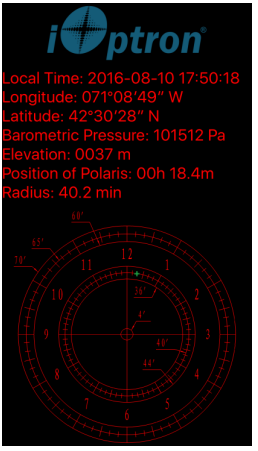

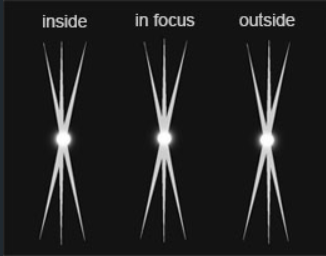

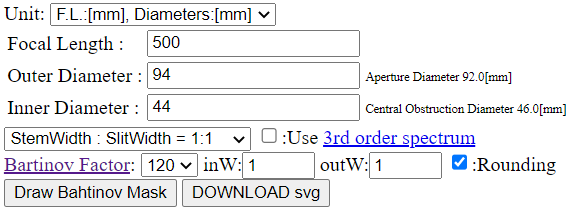

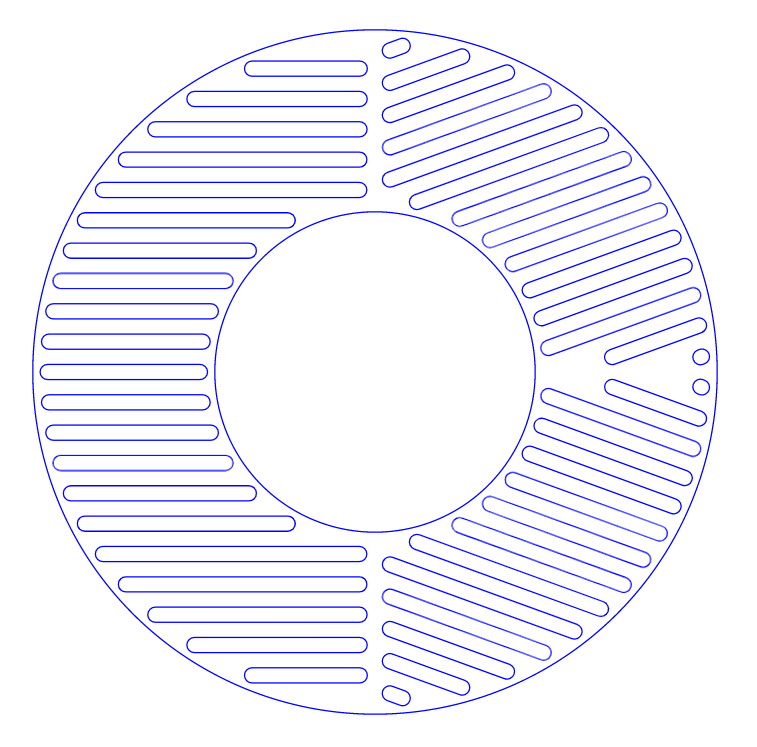

I took two strategies to extend a reliable Zigbee network to the workshop. First, I equipped the controller with a directional antenna, pointed towards the workshop. This puts as much RF energy as is practical in that direction. So far as I can tell, the existing Zigbee devices in the house suffered no change in signal strength. Apparently the side lobes on this antenna are good enough to cover the rest of the house. This antenna alone was enough to let one test device connect out in the workshop, at the full 125 foot distance, although the signal was down in the double digits, on the scale of 0-255.

The second leg of the strategy was to pile on the Zigbee router devices and try to saturate as much of the area between the house and workshop as possible with active routing devices.

So far, every AC powered Zigbee device I have seen is also a router. Two of the WiFi cloud controlled plugs I replaced are in the back yard. Actually, it was a single WiFi device with two individually controlled plugs that I replaced with two Zigbee devices, but who’s counting? While not directly between the controller and the workshop, they are outside the brick walls and at least slightly closer to the workshop, so they added to the mesh and signal path available to a device in the workshop.

Another item for control and automation is the wintertime heat lamp deployed in our wellhouse, which is between the house and workshop. When we built out the craft shack, I included an outdoor outlet specifically to help accomplish this task. I added another outside Zigbee plug here, which further stretched the mesh towards the workshop.

As of this writing, I have four Seedan smart plugs (which identify in Home Assistant as eWeLink smart plugs, neither of which can I find much authoritative info about online) which connect and operate flawlessly and are about $8 apiece. Most of them don’t even have anything plugged into them; I am using them primarily as Zigbee range extenders and routers.

I added one inside the RV, which is parked between the house and workshop. This means that, physically, between the controller in the house and any one device in the workshop there are as few as zero and as many as four available devices to route that entire distance.

I added one in the living room, behind where our DirecTV receiver is. That is physically between the controller and the two backyard units and hopefully helps fill in any gaps there.

The other two are in the workshop, one by the door (it was the first test device deployed out there) and the other on one of the workbench plugs, more as a second router for the workshop than anything else. The long term plan will be to deploy some Zigbee light switches out there. There is a decent need for two sets of 2 way switches. I may also plug my little air compressor into one of the plug switches and use some kind of motion detector for presence to limit the compressor to running only when someone is actually out there.

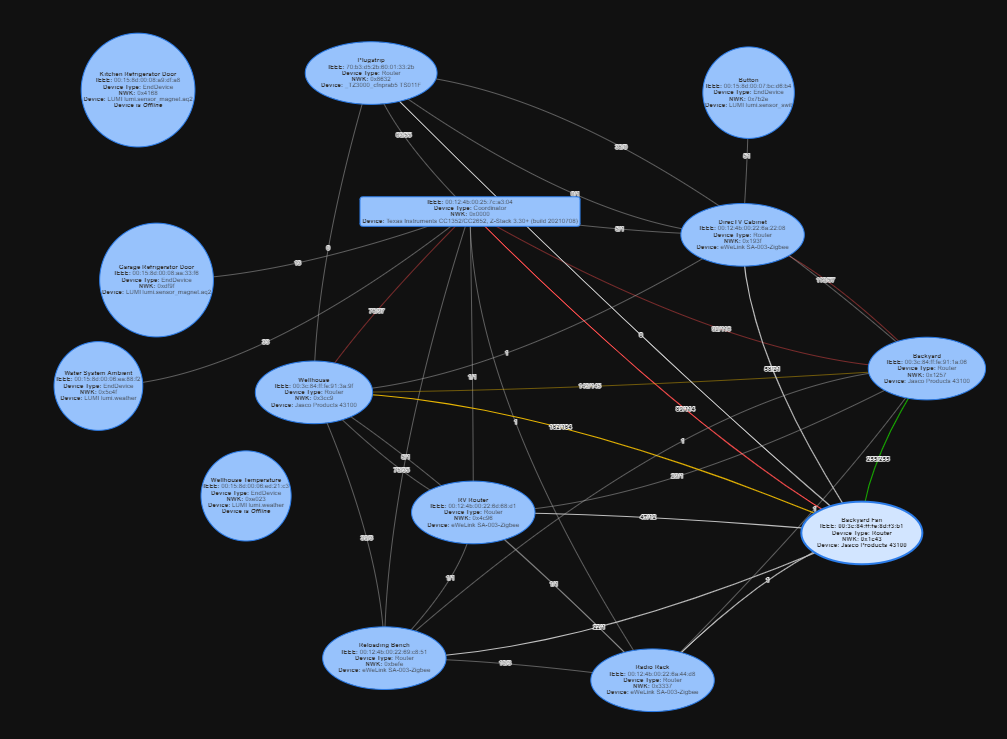

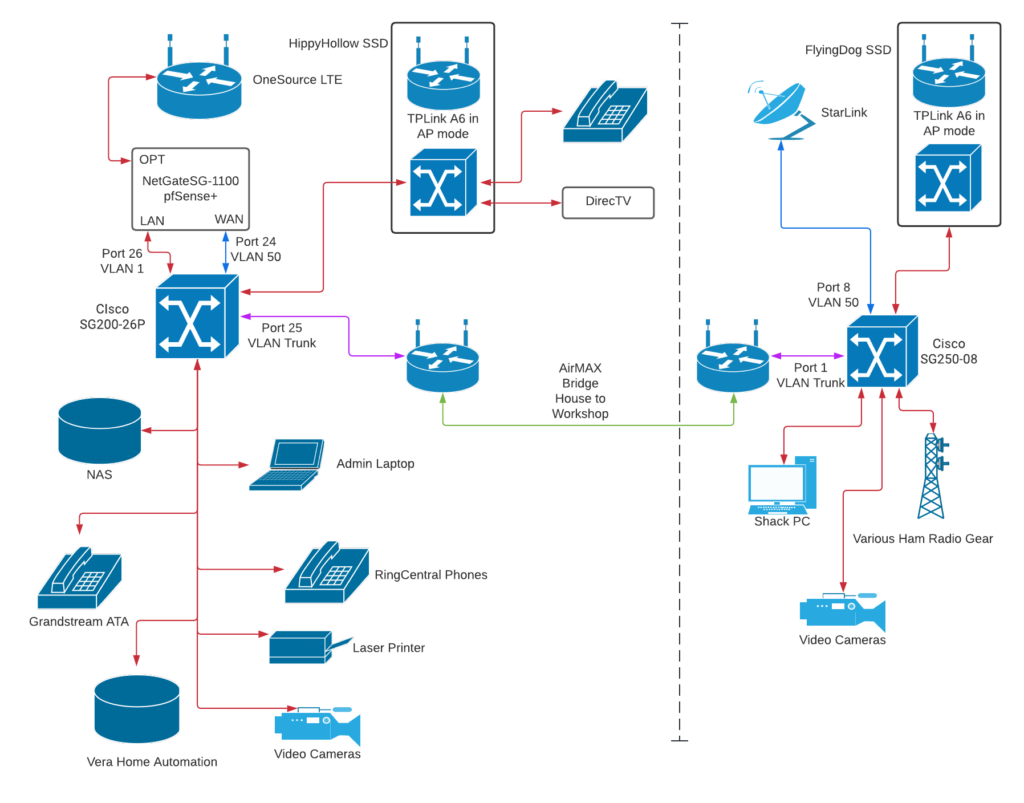

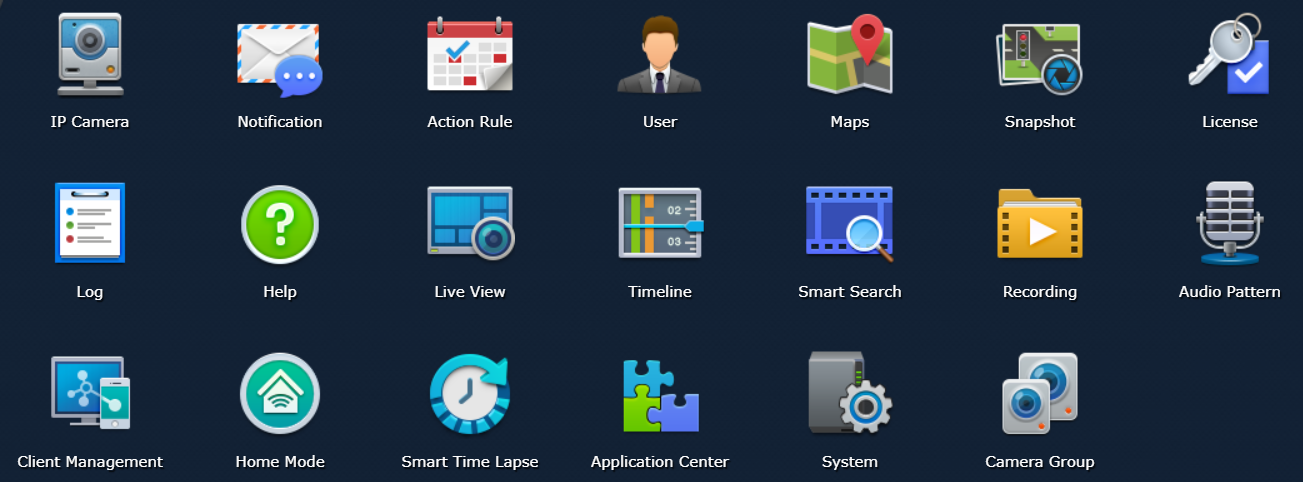

I digress somewhat. The Zigbee network as it stands today looks like this:

The mapping is automatically generated, though I did drag a few things around to make them fit the image a little better and to associate things somewhat physically.

There is, to me, an irritating feature of the map called Physics, which makes things move around on their own. It seems to seek to make the space between objects equal and consistent. It also seems to take off and do that just when I was reading something in the fine print on an object 🙂 Happily, there is a checkbox to turn it off.

The rectangle is the controller. Ovals are router devices, circles are battery devices. At the upper left is the Kitchen Refrigerator door, which for some reason shows offline in this map just now, but it is definitely working. Maybe an auto mapping anomaly. Shrug. More importantly, you can see the aforementioned complexity of the meshing that Zigbee builds between routers.

The color coding seems to be related to signal strength, red low, yellow lowish, & green great. Most links, however, show gray and numbers lower than red, which is mildly confusing, but generally those devices are working.

I was hoping that the battery units would form more than one path to the controller, but maybe I don’t understand the way they work just yet.

You may also note that I have a temperature sensor in the wellhouse. The wellhouse is a tiny metal building and even though the wellhouse plug router is only about 10 feet away, this temperature sensor does not work reliably at all. That may not be critical, but I do intend to automate based on the temperature *inside* the wellhouse, not just the temperature outside. I will figure out something 🙂
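As a rough idea of what automating on the inside temperature could look like, here is a small Python sketch of an on/off rule with a dead band, so the heat lamp does not chatter on and off around a single set point. It is not an actual Home Assistant automation. The function name and both thresholds (on at or below 35 °F, off at or above 40 °F) are assumptions picked purely for illustration.

```python
def heat_lamp_should_run(temp_f, lamp_on, on_below_f=35.0, off_above_f=40.0):
    """Decide the lamp state from the inside temperature.

    Between the two thresholds the lamp keeps whatever state it already
    has, which is what prevents rapid cycling. Both thresholds are
    hypothetical values, not recommendations.
    """
    if temp_f <= on_below_f:
        return True
    if temp_f >= off_above_f:
        return False
    return lamp_on


# Made-up sequence of inside temperatures in degrees Fahrenheit.
lamp_on = False
states = []
for temp_f in [45.0, 38.0, 34.0, 37.0, 41.0, 38.0]:
    lamp_on = heat_lamp_should_run(temp_f, lamp_on)
    states.append(lamp_on)

print(states)  # [False, False, True, True, False, False]
```

Note that 38 °F appears twice in the sample and gives the same answer both times only because the lamp was off beforehand in each case; at 37 °F the lamp stays on because it was already running.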

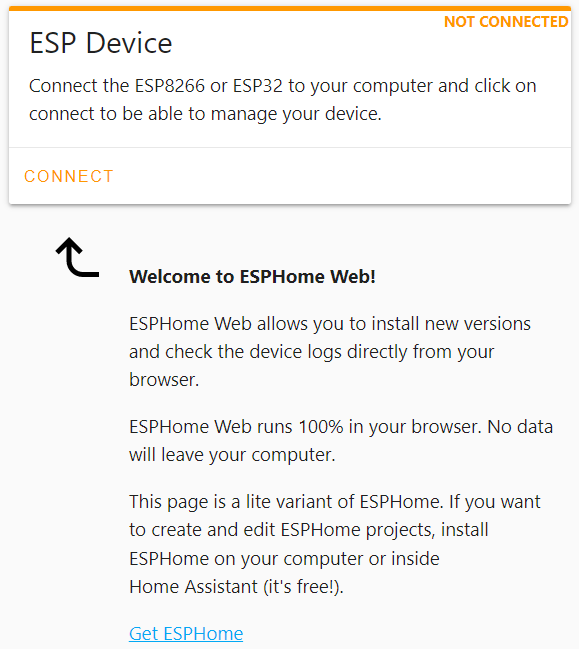

Up next, the very exciting world of ESPHome and I promise, the story about the Z-Wave debacle that I brought on myself.